OWASP's Updated Top 10 for LLMs Includes Vector and Embedding Weaknesses

The Update Looks Beyond Models to the Whole AI Stack

OWASP is an important organization whose sole job is to help make applications secure. They describe themselves like this:

“The Open Worldwide Application Security Project (OWASP) is a nonprofit foundation that works to improve the security of software.”

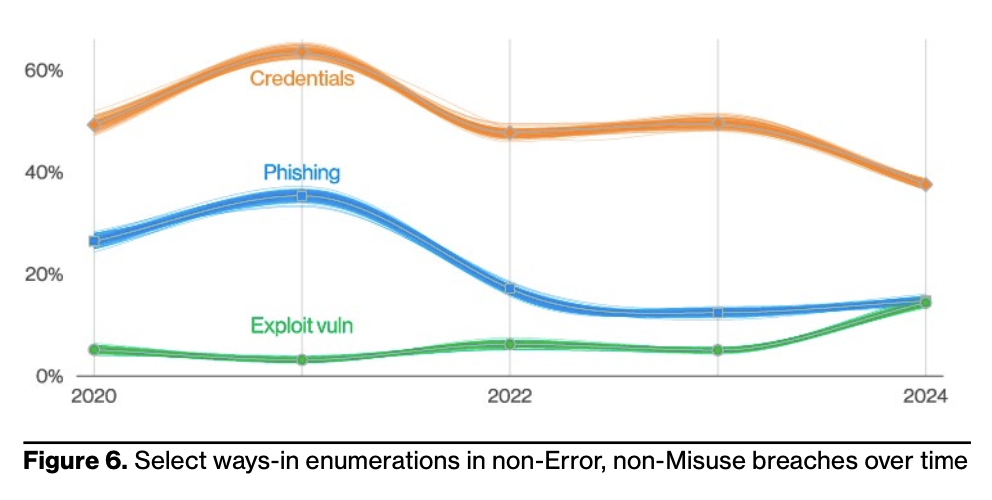

This work is desperately needed because standards and government regulations pertaining to AppSec are lacking. According to the Verizon 2024 Data Breach Investigations Report, problems with software applications and libraries are tied for second place among root causes of breaches (after stolen credentials). That’s up 180% from the previous year.

The original OWASP Top 10 list has for years been the gold standard of problems that security engineers should focus on eradicating from their code. Instead of an infinite list of possible issues, the idea is to help security teams focus on the problems that matter most in modern software.

“OWASP Top 10 for LLMs” v1.0

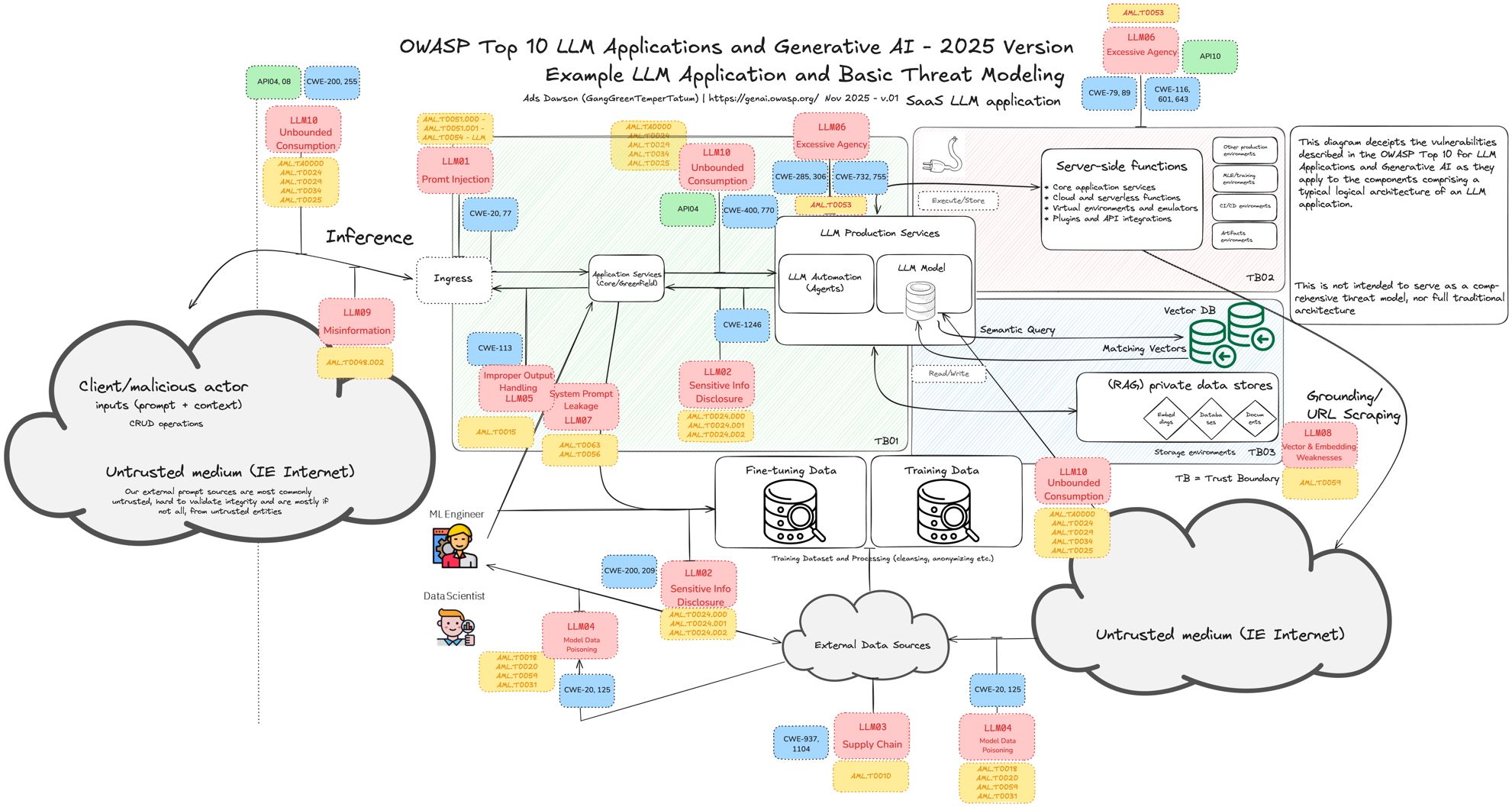

Since ChatGPT took the world by storm, OWASP has produced a “Top 10 for LLM Applications” list to focus organizations on the biggest security problems when using these AI tools.

The initial v1.0 list from 2023 focused heavily on attacks against the models themselves. Those attacks applied only to companies that were creating large language models and weren’t a concern for companies using them. For example, four of the ten entries were Training Data Poisoning, Model Denial of Service, Sensitive Information Disclosure (specific to extracting training data from models), and Model Theft. Of the remaining six, two were about using LLM outputs insecurely (Insecure Plugin Design and Excessive Agency) and one was about relying on LLM outputs (Overreliance).

For those of us studying practical attacks on LLM applications, it felt like a lot was being missed. RAG workflows, for example, introduce a multitude of risks and vulnerabilities into chat apps, but nothing in the original LLM Top 10 addressed this.

“OWASP Top 10 for LLM Applications 2025” aka v2.0

All of that has changed with version 2.0 of the list, released this month and officially titled, “OWASP Top 10 for LLM Applications 2025”.

The introduction to the paper detailing v2.0 talks about what’s new, saying:

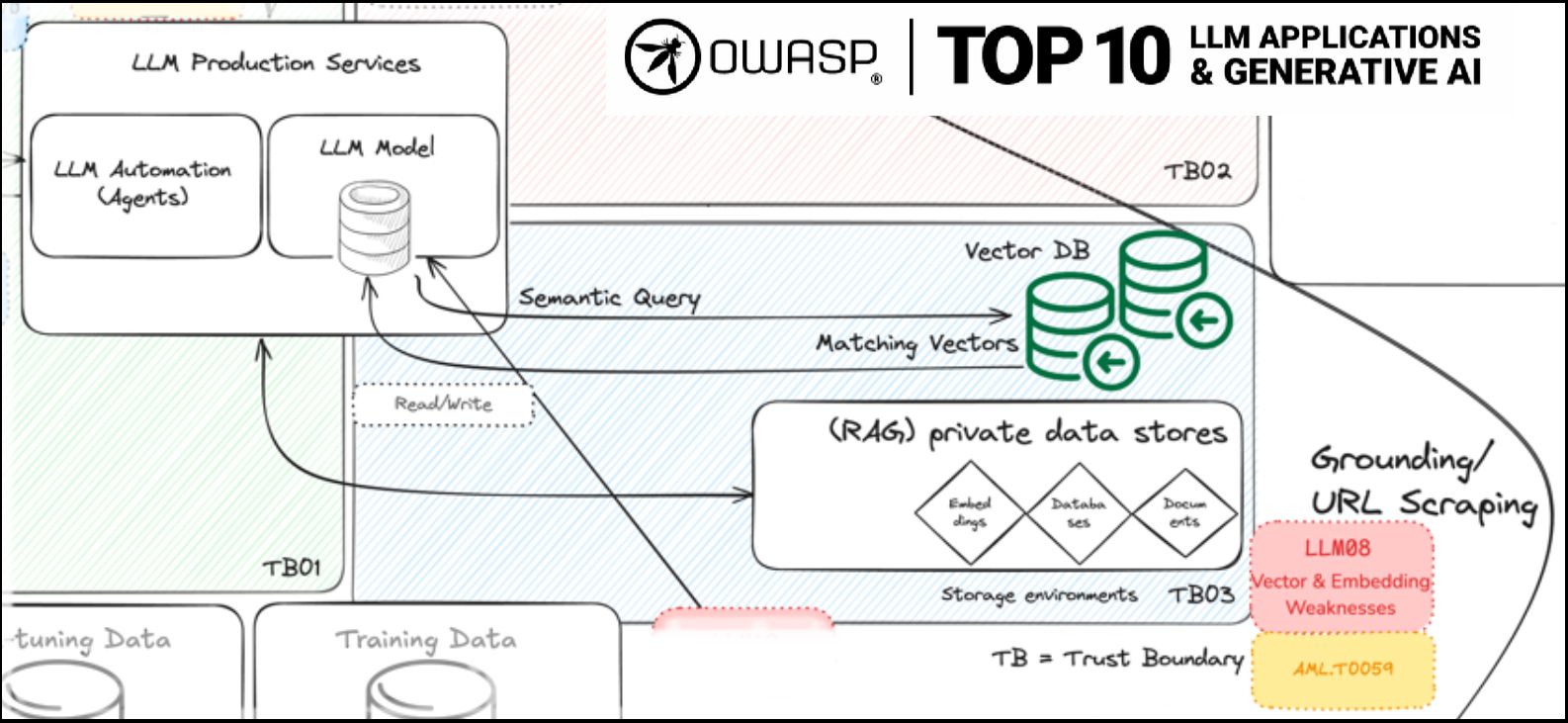

“The 2025 list reflects a better understanding of existing risks and introduces critical updates on how LLMs are used in real-world applications today. … The Vector and Embeddings entry responds to the community’s requests for guidance on securing Retrieval-Augmented Generation (RAG) and other embedding-based methods, now core practices for grounding model outputs.”

In other words, this new revision looks not just at the models, but at how models are used, how prompts are influenced or stolen, and the weaknesses in these systems in practical use.

For us here at IronCore Labs, this is incredibly validating because we’ve been trying to educate people for over a year on the risks in developing systems that use LLMs. We’ve talked about many of these problems (not just the ones we solve), and we’re glad to see that important things we’ve been talking about are captured in the new Top 10 list, though we go deeper in our own white papers.

A huge congratulations and thank you to the project leads who worked tirelessly to put this together and worked with the community to reach consensus on the biggest threats.

LLM08: Vector and Embedding Weaknesses

One of the most important new risks in the list is LLM08: Vector and Embedding Weaknesses. We hope a much wider audience will now become aware of just how critical these components are.

OWASP describes the entry like this: “Vectors and embeddings vulnerabilities present significant security risks in systems utilizing Retrieval Augmented Generation (RAG) with Large Language Models (LLMs).”

Unfortunately, the list doesn’t go into detail on the specific harms, on the sheer amount of insecure and unmonitored shadow data involved, or on the ways this can go wrong. That’s okay: the job of the OWASP Top 10 is to shine a light on the risks, not to provide blueprints for exploiting or mitigating risks that are changing this quickly.

LLM08 is an important one for anyone who uses vector search, vector embeddings, or RAG workflows, which is nearly everyone who uses private data together with LLMs.

Mitigating the LLM08 Risks

Companies that want to build secure and responsible AI solutions will need to assemble a suite of tools to mitigate these issues. One of the easiest steps, with little performance penalty and reasonable cost, is to encrypt all the vectors. With IronCore, it doesn’t matter where the vectors are stored (MongoDB, Pinecone, Elasticsearch, or elsewhere): we cover any storage so long as the developer can access the vectors before they’re stored.

When you encrypt vectors, you stop embedding inversion attacks and you gain application-layer encryption (ALE), which is often a requirement for sensitive data, for example under PCI DSS v4.
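To make the idea of transforming vectors before they reach storage more concrete, here is a minimal Python sketch. It is a toy illustration only, not IronCore's algorithm and not a secure scheme: the function names (`make_key`, `protect`, `cosine`), the seed, and the sample vectors are all hypothetical, and Python's `random` module is not a cryptographic generator. It uses a keyed coordinate shuffle with sign flips, which shows why similarity search can keep working on protected vectors: dot products and cosine similarity between two vectors transformed with the same key are unchanged.

```python
import math
import random


def make_key(dim, seed):
    """Build a toy secret key: a coordinate shuffle plus a sign per coordinate.

    Assumption for illustration only; `random` is NOT cryptographically secure.
    """
    rng = random.Random(seed)
    order = list(range(dim))
    rng.shuffle(order)
    signs = [rng.choice((-1.0, 1.0)) for _ in range(dim)]
    return order, signs


def protect(vector, key):
    """Shuffle coordinates and flip signs according to the key."""
    order, signs = key
    return [signs[i] * vector[src] for i, src in enumerate(order)]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


key = make_key(dim=4, seed=1234)
doc = [0.1, 0.7, -0.2, 0.4]      # hypothetical document embedding
query = [0.0, 0.6, -0.1, 0.5]    # hypothetical query embedding

stored = protect(doc, key)             # what the vector database would hold
protected_query = protect(query, key)  # queries use the same key

print(cosine(doc, query))
print(cosine(stored, protected_query))
```

The two printed similarities should match up to floating-point rounding, which is why the transformed vectors can still be searched wherever they are stored. A real scheme needs far stronger protection than a signed shuffle; the sketch only shows where such a step sits in the pipeline, namely in application code before the vector is written to the database.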

Companies that care about security will want to go through the entire Top 10 list, see what they can manage and mitigate, and then pursue those options, starting with the ones that are easiest and have the least impact on performance and cost. If you’re one of these companies, we’d love to talk with you about your needs. We also recommend you check out our ultimate guide to AI security to get a sense of what best practices around AI AppSec should be.