Secure Design Principles Series Part One

This blog post series is based on a talk that I give about designing and building systems with security in mind. The title of that talk is “Stop Expecting Magic Fairy Dust: Make Apps Secure by Design.”

In the book “Secure by Design,” the authors tell the story of a bank heist that took place in Sweden in 1854. The heist was notable because it was the largest bank robbery in history until the Great Train Robbery in England in the 1960s.

![](https://ironcorelabs.com/cdn-cgi/image/metadata=copyright,format=auto,onerror=redirect,quality=100,fit=scale-down/images/blog/1____NzbUDyRhxjN23Hlfw071Q.jpeg)

Image courtesy of Wikimedia user Thuresson

The Östgöta Bank invested in a state-of-the-art lock for its safe. This lock was “unpickable” and considered extremely secure. The vault itself was inside a locked building.

Unfortunately for the bank, its employees kept the key to the building on a hidden hook outside as a convenience. The robbers discovered the hidden key and used it to enter the bank in the middle of the night. Once inside, they went straight for the vault.

The vault had an unpickable lock, but the vault’s hinges were on the outside of the door, and they weren’t reinforced. So the robbers hammered off the hinges and bypassed the fancy lock entirely.

Lessons Learned

It turns out a pick-proof lock does not make a secure system. The same thing happens all the time in software development. Adding security features, such as two-factor authentication, is important, but reducing security to a set of features is a shortcut to catastrophe.

The only meaningful way to build a secure system is to scrutinize security implications during the design of every single feature, whether that feature is security-specific or not.

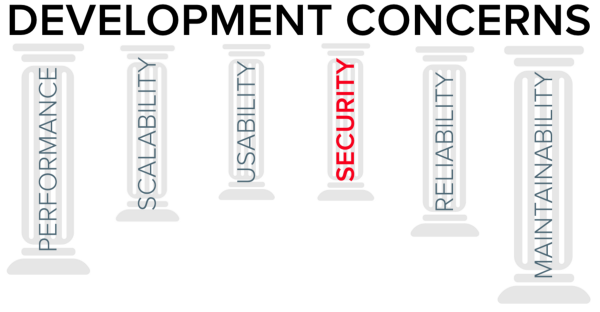

Security is the most overlooked pillar of modern apps

Security is a concern that must be prioritized across the board. Other cross-cutting design concerns are Performance, Scalability, Usability, Reliability, and Maintainability. Of these, Security is the most overlooked pillar of modern apps. The consequences of this problem can be seen in the daily deluge of security breaches and vulnerability disclosures.

When we talk about security concerns, we primarily mean three things: Confidentiality (data can only be seen by authorized users), Integrity (data can only be changed by authorized users), and Availability (malicious users can’t intentionally crash a program or take down a service). Availability overlaps somewhat with Reliability, but in this context it assumes a malicious party that wants to take the system down, whereas Reliability typically focuses on failures of networks, servers, disks, power, and other more natural events.
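As a rough illustration of the first two definitions, here is a minimal Python sketch in which confidentiality and integrity are enforced as authorization checks on reads and writes. The names `Document`, `read_document`, `update_document`, and `AccessDenied` are hypothetical and exist only for this example; a real system would also need authentication, auditing, and protections for availability, none of which are shown here.

```python
from dataclasses import dataclass, field
from typing import Set


class AccessDenied(Exception):
    """Raised when a user is not authorized for the requested action."""


@dataclass
class Document:
    # Hypothetical record type used only for this illustration.
    body: str
    readers: Set[str] = field(default_factory=set)  # users allowed to see the data
    writers: Set[str] = field(default_factory=set)  # users allowed to change the data


def read_document(doc: Document, user: str) -> str:
    # Confidentiality: data can only be seen by authorized users.
    if user not in doc.readers:
        raise AccessDenied(f"{user} may not read this document")
    return doc.body


def update_document(doc: Document, user: str, new_body: str) -> None:
    # Integrity: data can only be changed by authorized users.
    if user not in doc.writers:
        raise AccessDenied(f"{user} may not modify this document")
    doc.body = new_body
```

The point of the sketch is that the checks live inside the functions that touch the data, so every feature that reads or writes a document passes through them rather than relying on a separate security feature elsewhere.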

Even requirements set by product managers should treat security as fundamental. For example, product managers often create user personas around features, like Jane User, who is trying to accomplish something using the application. But few product managers add malicious personas to the model. Where is the Snowden persona?

The IT Department’s Magic Fairy Dust

In most organizations, the security budget goes to IT. Typically the CIO or CISO controls this budget. Consequently, most security solutions are geared to the problems in the IT domain, like the network. But firewalls, anti-virus, intrusion detection, etc., sit outside of applications. From that vantage point, they can only do so much.

Application bugs and security flaws can’t be fixed without changes to the application code. However secure a network perimeter may be, it always lets some traffic through. If traffic didn’t need to flow through the perimeter, then there would be no need for external connections in the first place.

In a perfect world, the magic security solutions sitting on the network would detect and thwart all attacks, and it wouldn’t matter what vulnerabilities were buried in an application’s code. Alas, in the real world, there’s no such thing as a perfectly secure perimeter.

Similarly, if application developers could write perfectly secure code, it would hardly matter whether perimeter security solutions were in place. Unfortunately, even if a perfect developer existed, and she could write bug-free code 100% of the time, she would still depend on third-party libraries, frameworks, operating systems, network infrastructure, and more. Every one of these dependencies inevitably has security flaws of its own.

We need both strong perimeters and secure applications. IT must assume that apps running in its infrastructure are vulnerable, and that assumption will inevitably be correct. Likewise, developers must assume that the network is compromised and that unauthorized users have unfettered access. That, too, is likely a correct assumption.
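One small consequence of assuming a compromised network is that an application should not trust a message merely because it arrived from an internal address. The following is a minimal sketch, not a complete protocol, of verifying a message with an HMAC from Python's standard library before acting on it. The helper names `sign` and `verify` are hypothetical, and the sketch assumes the sender and receiver already share a secret key through some out-of-band mechanism; it does not address key distribution or replay protection.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Return an HMAC-SHA256 tag for the message (hypothetical helper)."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Accept the message only if its tag matches.

    compare_digest compares in constant time, which avoids leaking
    how much of the tag was correct through timing differences.
    """
    return hmac.compare_digest(sign(key, message), tag)
```

A receiver would call `verify` on every request and reject anything that fails, regardless of where on the network the request came from.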

Designing Secure Features

A principle is a rule or belief that governs behavior. Alternatively, it’s a quality or essence of something. This is an apt way to think about design principles. They should be fundamental and essential, governing all requirements, design, code, and maintenance of software systems.

There are different lists of secure design principles. For example, NIST has 33 of them, and OWASP has 10 in their development guide.

Unfortunately, many of these design principles are presented in a way that feels tedious and unintuitive.

For example, OWASP has the principle of “Psychological Acceptability.” From their dev guide, this means:

A security principle that aims at maximizing the usage and adoption of the security functionality in the software by ensuring that the security functionality is easy to use and at the same time transparent to the user.

This is a great principle, but it can be summed up in one word: “Usability.” We’ll talk later about this principle, about why usability and security are not opposites, and about why, in many cases, usability leads to much stronger security.

In the rest of this series, we’ll walk through IronCore’s top ten design principles, all of which relate closely to OWASP principles, NIST principles, or both, but which we hope have higher impact through more memorable names and familiar references. We’ll work through each of these principles using stories that illustrate what happens when the principles aren’t followed.

Ultimately, we hope to drive secure design practices deeper into more organizations. Help us by sharing this article and following the rest of the series.

Coming Soon: Secure Design Principles Series Part Two: Layers