AppSec Fails and the Incredible Durability of Application Vulnerabilities

Bugs, Bugs, Bugs

Programmers make mistakes. They’re human and imperfect, so bugs are to be expected. In well-tested and widely used applications, the bugs that make it to production are fewer and less impactful. Even so, bugs escape automated tests, human QA, code scanners, and audits. Some of the ones that slip through get found and reported by customers, or by bug bounty hunters: security testers who tell you about security problems they find in your code in exchange for rewards.

Even with a very good quality program and all of these measures in place, some bugs inevitably escape. Unfortunately, some of those will be critical security vulnerabilities.

Three Case Studies

Updated 2021-12-10 to add case study three.

Case Study 1: Confluence, an Atlassian Product

Let’s look at three case studies. The first is Confluence. In August 2021, Atlassian disclosed CVE-2021-26084, an OGNL injection vulnerability.

If you’re like me and you’d never heard of Object-Graph Navigation Language (OGNL), all you need to know is that it’s a language for manipulating arbitrary Java objects, and it can be used to execute arbitrary Java code and system commands.

This particular vulnerability was nasty because it was so easy to exploit. An attacker simply adds an escape character to a URL, followed by whatever OGNL expression they want. The attacker doesn’t even have to be logged in.
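To make the mechanism concrete, here is a rough Python analogue of an expression-injection bug. It is not Confluence’s code and doesn’t model the escape-character detail: Python’s `eval()` stands in for an OGNL evaluator, and `vulnerable_handler`, `safer_handler`, `PAGE_FIELDS`, and the URL are hypothetical. The point is only that text taken straight from a URL ends up being evaluated as code.

```python
from typing import Optional
from urllib.parse import parse_qs, quote, urlsplit

# Hypothetical request handler: it reads the "q" query parameter and evaluates
# it as an expression. Python's eval() stands in for an OGNL evaluator here.
def vulnerable_handler(url: str):
    params = parse_qs(urlsplit(url).query)
    expr = params.get("q", [""])[0]
    return eval(expr)  # attacker-controlled text runs as code

# Hypothetical page fields the handler is actually meant to expose.
PAGE_FIELDS = {"title": "Home", "space": "DOCS"}

def safer_handler(url: str) -> Optional[str]:
    params = parse_qs(urlsplit(url).query)
    name = params.get("q", [""])[0]
    # The parameter is treated as data (a lookup key), never as code.
    return PAGE_FIELDS.get(name)

# No login needed: the "query" is just part of the URL.
payload = "__import__('os').getcwd()"
url = "https://wiki.example.com/page?q=" + quote(payload)
print(vulnerable_handler(url))  # executes os.getcwd() inside the server process
print(safer_handler(url))       # None: the payload is just an unknown key
```

The safer version never evaluates the parameter; it only uses it as a key into a fixed set of fields, so the payload has nothing to execute.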

To call this a nightmare scenario is to undersell it, and I feel bad for Atlassian. They pay a lot of attention to security and have had many auditors hunt for issues, yet this particular bug, about as severe and as easy to exploit as it gets, gives the attacker full superpowers over the system. The problem was first introduced in version 4.0, and they’re now on version 7.12.

The vulnerable code was introduced in 2011, which means this issue has been exploitable for 10 years.

This isn’t a knock against Atlassian. It’s likely that undiscovered issues like this exist all across the industry at this very moment, and some of them have probably been sold to governments and others in zero-day markets.

Case Study 2: Mozilla NSS

Mozilla’s cross-platform cryptography code, Network Security Services or NSS for short, is used widely across the industry. As a fundamental part of security on the Internet, it’s received a ton of scrutiny. And Mozilla doesn’t mess around with this library. They have automated fuzz testing, manual audits and analysis, code scans by scanners that should find vulnerabilities, and much more.

Yet recently a security researcher discovered a critical issue in the NSS code.

Many things are notable about this discovery, but the one that stands out most is that this is a trivial, textbook buffer overflow. Everything Mozilla does to vet the security of this library, from fuzzing to code scanning, should have caught it. The vulnerability is both simple and catastrophic.

In short, attacker-controlled input is copied into memory without any check that it fits within the allocated buffer. That lets the attacker overwrite memory past the end of the buffer, including a function pointer, which means they can point execution at code of their choosing and run anything they want on a remote machine.
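A minimal Python simulation of that failure, assuming a made-up memory layout (a 16-byte buffer sitting directly in front of an 8-byte function pointer) rather than NSS’s actual data structures. `unchecked_copy` plays the role of a copy with no length check, and `checked_copy` adds the missing sanity check; all names and addresses are hypothetical.

```python
# Simulated memory layout (hypothetical, not NSS's real structures):
# a 16-byte buffer immediately followed by an 8-byte "function pointer".
BUF_SIZE = 16
PTR_SIZE = 8
LEGIT_HANDLER = 0x1000  # pretend address of the intended function

def new_memory() -> bytearray:
    mem = bytearray(BUF_SIZE + PTR_SIZE)
    mem[BUF_SIZE:] = LEGIT_HANDLER.to_bytes(PTR_SIZE, "little")
    return mem

def read_ptr(mem: bytearray) -> int:
    return int.from_bytes(mem[BUF_SIZE:BUF_SIZE + PTR_SIZE], "little")

def unchecked_copy(mem: bytearray, data: bytes) -> None:
    # Like memcpy(buf, data, len(data)) with no length check: anything past
    # BUF_SIZE spills into the neighboring pointer slot.
    n = min(len(data), len(mem))  # the simulation itself can't write past mem
    mem[:n] = data[:n]

def checked_copy(mem: bytearray, data: bytes) -> bool:
    # The missing sanity check: refuse input larger than the buffer.
    if len(data) > BUF_SIZE:
        return False
    mem[:len(data)] = data
    return True

attack = b"A" * BUF_SIZE + (0xDEADBEEF).to_bytes(PTR_SIZE, "little")

mem = new_memory()
unchecked_copy(mem, attack)
print(hex(read_ptr(mem)))  # 0xdeadbeef: the pointer now holds an attacker-chosen value

safe = new_memory()
print(checked_copy(safe, attack), hex(read_ptr(safe)))  # False 0x1000
```

In C the unchecked version would write real memory beyond the buffer; the single length comparison in `checked_copy` is the kind of check that was missing.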

So how long has this bug been lurking? The issue was first introduced in 2003, though other factors mitigated the issue until 2012. So this is a 10- to 18-year-old bug in code that is heavily scrutinized for security. Yikes.

Bonus Case Study 3: Log4J

UPDATED 2021-12-10: A day after publishing this blog, a new blockbuster vulnerability hit that underscores the point, so we’ve added it as a third case study.

Early this morning, a major bug was reported in a foundational library, log4j, used widely across the Internet (Cloudflare, Apple, Microsoft, Steam, and more). As with the other two case studies, exploiting this issue is trivial and it gives attackers the ability to execute arbitrary code.

The bug in question has been in the code since version 2.0, which was released in July of 2014. So this issue has been a problem for 7.5 years. And this is another widely used and widely scrutinized library.

The Industry Stats

Alright, sometimes bad things lurk in code for long periods of time, but is that normal?

Yes.

According to GitHub’s security report, a security vulnerability sits in code for an average of 4 years before being discovered. Once it’s found, it takes an average of 4.4 weeks to get a fix, and then another 33 to 46 days, on average, for developers to patch their software.
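Adding those averages up gives a rough sense of the exposure window. This sketch assumes 365.25-day years and 7-day weeks and simply sums the three stages; treating the three averages as if they described the same bug is a simplification.

```python
DAYS_PER_YEAR = 365.25  # assumption: average year length, including leap years
DAYS_PER_WEEK = 7

undiscovered = 4 * DAYS_PER_YEAR   # average time in code before discovery
to_fix = 4.4 * DAYS_PER_WEEK       # average time to produce a fix
to_patch = (33, 46)                # additional days for developers to patch

def exposure_days(patch_days: float) -> float:
    """Total days from introduction of the bug until it is patched downstream."""
    return undiscovered + to_fix + patch_days

for d in to_patch:
    total = exposure_days(d)
    print(f"{total:.0f} days (~{total / DAYS_PER_YEAR:.1f} years), "
          f"{undiscovered / total:.0%} of it before anyone knows")
```

Under these assumptions the total comes to roughly 1,525 to 1,538 days, and about 95% to 96% of that is time before the bug has even been found.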

That means attackers have years to find and exploit the issues.

Not good.

The Antidote is AppSec

Application Security (AppSec) is supposed to counteract these problems. In practice, though, AppSec can at best reduce the chances of problems; it can’t eliminate them. That still makes it critically important work for application developers. An ounce of prevention is worth a pound of cure.

Here are a few of the things that a good AppSec program does:

- Patch management: keep dependencies and environments up-to-date with security fixes.

- Dependency vetting: formal scrutiny of toolchain and environment dependencies.

- Secure development lifecycle (SDL): everything from developer training to security-related architecture reviews to security-based QA.

- Scanning: code scans and external penetration tests.

- Security functionality: security or privacy-related features such as single sign-on and multi-factor authentication.

- Application-layer encryption: reduces the likelihood of a breach or a vulnerability turning into a catastrophic data loss event.
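Patch management and dependency vetting both start with knowing which versions you run and whether any of them fall inside a known-vulnerable range. A minimal sketch of that check, assuming plain dotted numeric version strings and a hypothetical advisory table (`ADVISORIES`, the package names, and the version ranges are all made up for illustration); in practice this data would come from a vulnerability advisory database.

```python
from typing import Dict, List, Tuple

Version = Tuple[int, ...]

def parse_version(v: str) -> Version:
    # Assumes plain dotted numeric versions such as "2.14.1".
    # Trailing zeros are dropped so "2.3" and "2.3.0" compare as equal.
    parts = [int(p) for p in v.split(".")]
    while parts and parts[-1] == 0:
        parts.pop()
    return tuple(parts)

# Hypothetical advisory data: package -> (first affected, first fixed) version.
ADVISORIES: Dict[str, Tuple[str, str]] = {
    "example-logger": ("2.0", "2.3.1"),
    "example-tls": ("1.4", "1.9"),
}

def vulnerable_deps(installed: Dict[str, str]) -> List[str]:
    """Return a finding for each installed package in a known-bad range."""
    findings = []
    for name, version in installed.items():
        if name not in ADVISORIES:
            continue  # no known advisory for this package
        first_bad, first_fixed = ADVISORIES[name]
        v = parse_version(version)
        if parse_version(first_bad) <= v < parse_version(first_fixed):
            findings.append(f"{name} {version}: upgrade to >= {first_fixed}")
    return findings

installed = {"example-logger": "2.2", "example-tls": "1.9.0", "other-lib": "0.5"}
for finding in vulnerable_deps(installed):
    print(finding)
```

Here only `example-logger 2.2` is flagged: `example-tls 1.9.0` equals the first fixed version, and `other-lib` has no advisory.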

Application-layer Encryption

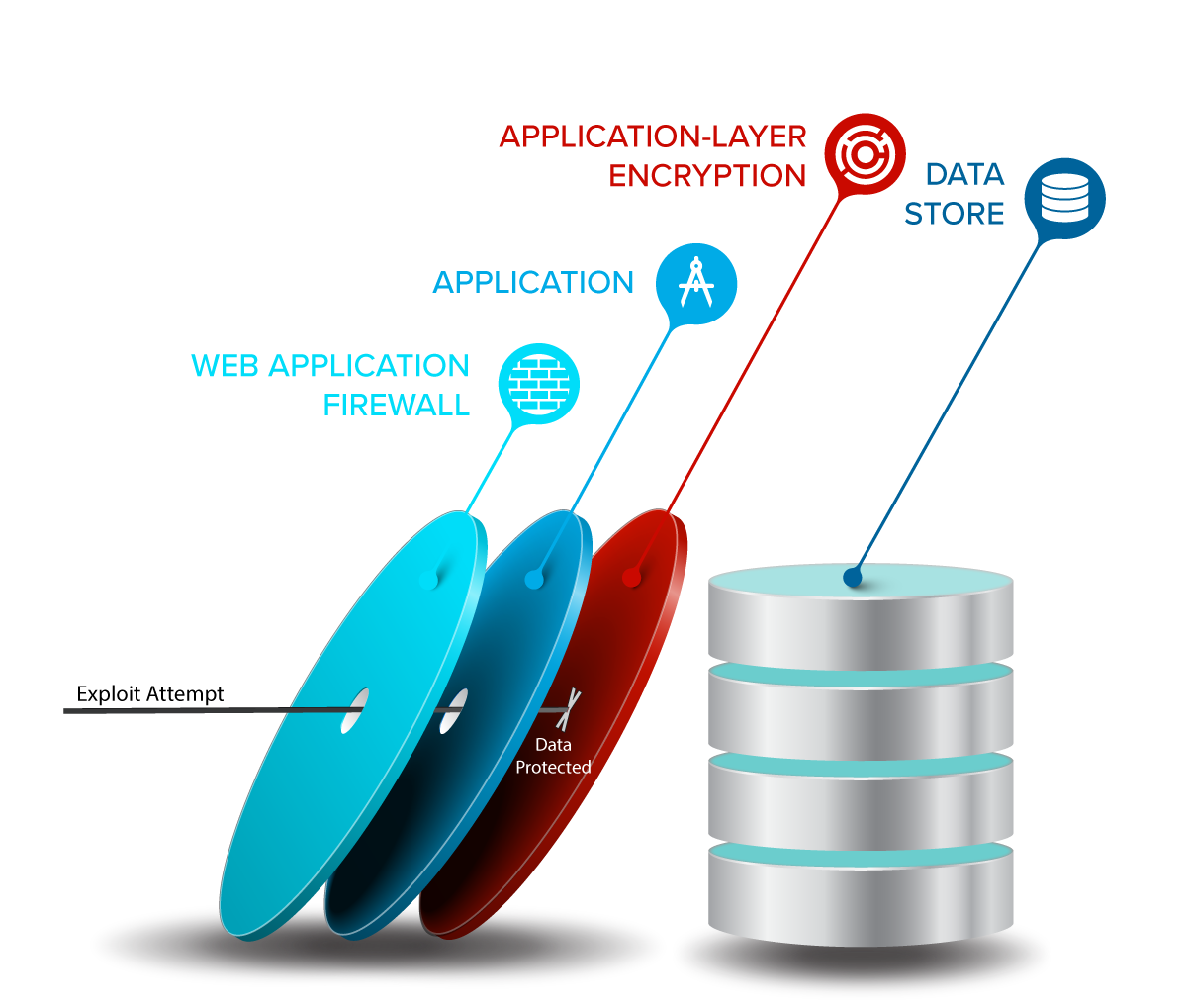

That last one is pretty important because it’s the only one that answers the question of, “what if something goes wrong and a vulnerability slips through?” It’s the only thing that adds a layer between an application and its data to protect the data in case of injection attacks, network breaches, and other issues.
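As a minimal sketch of the idea, the Python below encrypts a sensitive field in the application before it reaches the database, so someone who can read the table (say, through an injection bug) sees only ciphertext. It uses AES-GCM from the third-party `cryptography` package; the table, the `store_ssn`/`load_ssn` helpers, and the in-memory key are hypothetical simplifications, and in a real deployment the key would be kept outside the database (for example, in a key management service). This is not a description of how any particular product works.

```python
import os
import sqlite3

from cryptography.hazmat.primitives.ciphers.aead import AESGCM

# Assumption: a real key would come from a key management service, not be
# generated in process memory like this.
KEY = AESGCM.generate_key(bit_length=256)
aead = AESGCM(KEY)

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, ssn BLOB)")

def store_ssn(user_id: int, ssn: str) -> None:
    nonce = os.urandom(12)        # 96-bit nonce, fresh for every encryption
    aad = str(user_id).encode()   # associated data binds the ciphertext to this row
    ct = aead.encrypt(nonce, ssn.encode(), aad)
    db.execute("INSERT INTO users (id, ssn) VALUES (?, ?)", (user_id, nonce + ct))

def load_ssn(user_id: int) -> str:
    (blob,) = db.execute("SELECT ssn FROM users WHERE id = ?", (user_id,)).fetchone()
    nonce, ct = blob[:12], blob[12:]
    # Raises cryptography.exceptions.InvalidTag if the data or row id was tampered with.
    return aead.decrypt(nonce, ct, str(user_id).encode()).decode()

store_ssn(1, "123-45-6789")

# What an attacker who can read the table sees:
(raw,) = db.execute("SELECT ssn FROM users WHERE id = 1").fetchone()
print(raw.hex())     # random-looking bytes, not the SSN
print(load_ssn(1))   # the application, holding the key, recovers the value
```

Passing the row id as associated data ties each ciphertext to its row, so an attacker who can write to the table can’t simply copy one user’s encrypted value into another user’s row without decryption failing.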

Application-layer encryption is often overlooked and frequently disregarded, but it’s a critical part of AppSec that teams need to pay attention to.

Robust security requires a defense-in-depth approach that doesn’t rely on magic firewalls, which can be evaded and have no way to understand, let alone defend against, application-specific logic errors.

AppSec without layers is like cereal without milk: better than nothing, but sadly inadequate.

Is your AppSec program complete? If not, you should look into products like IronCore’s SaaS Shield, which can help.