The Trouble With FIPS: Encryption Standards Need a Makeover

Encryption Standards

If we look at the landscape of cryptography that’s actually in use worldwide, we find that the tools people are using to secure their communications and their data are almost unchanged from 25 years ago. And there’s one simple reason: everyone has been trained to wait for something to become a cryptographic standard before using it.

That’s theoretically a good thing. Snake-oil cryptography is an endemic problem, and much of the time the purveyors don’t even know they’re selling snake oil.

Ideally, the cryptography community would regularly rally together to nominate and vote on the currently most secure, efficient, and safe algorithms (perhaps even implementations?). These would then be subject to extra scrutiny and attacks for some period, and the ones still standing could become standards that users of cryptography would know they could trust.

Unfortunately, that just isn’t how things work.

The Federal Information Processing Standard (FIPS)

In the U.S., it all comes down to the Federal Information Processing Standard (FIPS 140). The FIPS document is produced and maintained by the National Institute of Standards and Technology (NIST), and it dictates the security requirements for Federal agencies and departments. Government subcontractors must adhere to FIPS as well, and some financial and healthcare laws point to FIPS as a requirement for some data.

FIPS 140 is pretty broad in its requirements and covers many things, but among them, it has a list of approved cryptographic “modules” (algorithms and parameters, basically). These are listed in FIPS 140 Annex A with some calls out to other documents, which we’ll come to in a minute. This Annex A list is where we’ll focus our attention.

The approved modules include:

- Symmetric Ciphers

- AES (CBC, CCM, CFB, CTR, ECB, GCM, XTS) minimum 128-bit, maximum 256-bit keys

- 3DES (but not for much longer)

- Asymmetric

- Elliptic-Curve Cryptography (P-192, P-224, P-256, P-384, P-521, B-163, B-233, B-283, B-409, B-571, K-163, K-233, K-283, K-409, K-571)

- RSA (minimum 2048-bit keys)

For AES, they only allow specific modes and key sizes. For elliptic curves, they require specific curves with specific parameters (such as P-256).
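To make the "specific modes, specific key sizes, specific curves" point concrete, here is a minimal sketch of what an allow-list check might look like. The sets are transcribed from the list above (with the three AES key sizes and the P-384 curve filled in); the function name `is_on_list` and its parameters are hypothetical and are not part of any NIST tooling.

```python
# Hypothetical allow-list transcribed from the list above; not an official NIST artifact.
APPROVED_AES_MODES = {"CBC", "CCM", "CFB", "CTR", "ECB", "GCM", "XTS"}
APPROVED_AES_KEY_BITS = {128, 192, 256}  # AES only defines these three key sizes
APPROVED_CURVES = {
    "P-192", "P-224", "P-256", "P-384", "P-521",  # P-384 is also a NIST prime curve
    "B-163", "B-233", "B-283", "B-409", "B-571",
    "K-163", "K-233", "K-283", "K-409", "K-571",
}
MIN_RSA_BITS = 2048


def is_on_list(algorithm, *, mode=None, key_bits=None, curve=None):
    """Return True if the algorithm and its parameters appear on the list above."""
    if algorithm == "AES":
        return mode in APPROVED_AES_MODES and key_bits in APPROVED_AES_KEY_BITS
    if algorithm == "3DES":
        return True  # still listed, "but not for much longer"
    if algorithm == "ECC":
        return curve in APPROVED_CURVES
    if algorithm == "RSA":
        return key_bits is not None and key_bits >= MIN_RSA_BITS
    # Anything else is "non-standard", no matter how well studied it is.
    return False


print(is_on_list("AES", mode="GCM", key_bits=256))  # on the list
print(is_on_list("ECC", curve="Curve25519"))        # not on the list
```

The check says nothing about whether a choice is secure. It only says whether the choice is on the list, which is exactly the distinction this article is about.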

Interestingly, the algorithms that are blessed by NIST in FIPS 140 are not required for use on classified data. In fact, they’re only to be used for unclassified data. And three-letter intelligence agencies are exempted from having to use them. Which makes you wonder… 🤔

But here’s the thing: the Annex A selections are old. They’ve hardly changed in years.

Non-standard Cryptography

Most cryptographic innovations over the past 30 years are “non-standard,” meaning they aren’t blessed by the U.S. Government via FIPS. And people are afraid of non-standard cryptography. This is because we’ve rightfully trained them to be distrustful of anything that isn’t widely used and tested. It’s very hard to get cryptographic protocols “right” and even harder to get the implementations “right” (for some definition of “right” that evolves over time as new attacks are discovered).

But there have been those who have worked very hard to build highly secure systems with lots of sunshine, subject to plenty of scrutiny, and that the wider community regards as safe. To bring something like this to market, it would have to meet at least these criteria:

- Be based on widely studied, peer-reviewed, peer-attacked, published algorithms and protocols.

- Use vetted parameters (inputs to the algorithms).

- Be open source, with no hidden code or implementations.

- Have that code audited by a top-tier crypto auditor.

- Ideally, be implemented in constant time so it isn’t subject to timing attacks or cache attacks.
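The last criterion is easier to see in code. The sketch below contrasts a naive byte comparison, which returns as soon as it finds a mismatch, with Python's standard-library `hmac.compare_digest`, which is designed so that its running time does not depend on where the inputs differ. The function names `naive_equal` and `constant_time_equal` are made up for this illustration.

```python
import hmac


def naive_equal(a: bytes, b: bytes) -> bool:
    """Early-exit comparison: how long it runs hints at the length of the matching prefix."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False  # bails out at the first differing byte
    return True


def constant_time_equal(a: bytes, b: bytes) -> bool:
    """Comparison whose timing does not reveal the position of the first difference."""
    return hmac.compare_digest(a, b)


expected_tag = bytes.fromhex("aabbccddeeff0011")
guess = bytes.fromhex("aabbccdd00000000")

# Both functions agree on the answer; they differ only in what their timing leaks.
print(naive_equal(expected_tag, guess), constant_time_equal(expected_tag, guess))
```

An attacker who can measure the naive version precisely enough can recover a secret tag one byte at a time, which is why real implementations avoid data-dependent branches when handling secrets.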

Even then, there’s no guarantee any cryptography is truly secure. What we believe to be secure today can be broken tomorrow by some novel attack. If you look back to the ’90s, most of the things we encrypted back then can be cracked now. That’s due to flaws in implementations, key sizes that weren’t large enough to keep up with hardware advances, and new attacks. It’s why we aren’t using Triple-DES as the “Data Encryption Standard” anymore (well, FIPS still allows it, but that’s another story).

But the bigger point here is that there are some extremely good, well-vetted, and widely trusted algorithms and implementations, such as Curve25519, that are considered “non-standard” and which many companies won’t touch for that reason. Anything innovative, like proxy re-encryption (full disclosure: my company has built open-source, audited PRE libraries), is nowhere to be found in the standards.

Quantum Computing and the PQC Competition

Today we live in an era of uncertainty regarding the future of cryptography. Public key cryptography like RSA and Elliptic Curve Cryptography (ECC) is, in theory, breakable by a quantum computer of sufficient power. Quantum computers exist today, but making them powerful enough to crack cryptographic codes has proven to be exceptionally challenging. As far as we know, no one has succeeded. But then again, if some Government did succeed, they probably wouldn’t publish that fact to the world. And regardless, progress is being made and at some point, all of the public key NIST standards could become worthless.

Post-quantum cryptography (PQC) is the study of algorithms that are theoretically strong against standard computer attacks as well as quantum computer attacks. A lot of learning is happening in this area right now, and a ton of investment from both industry and academia is focusing a lot of sharp minds on the problem. NIST is hosting a competition to pick the best new public key approach that is resistant to quantum computers. If all goes as planned, the winner will be the first new algorithm to become a standard in over twenty years.

But here’s the thing: the algorithm that wins that competition will inevitably be subject to many more attacks than it ever has been, and it may well be susceptible to non-quantum attacks. When the competition crowns a winner, the prudent among us will choose to layer it with more tried and trusted algorithms in order to raise the bar for an attack.
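One common way to do that layering is to run a classical key exchange and a post-quantum one side by side and derive the session key from both shared secrets, so an attacker has to break both. The sketch below assumes the two secrets already exist (random bytes stand in for them here) and combines them with HKDF over HMAC-SHA-256 as described in RFC 5869. The function names, the length-prefix encoding, and the `info` label are illustrative choices, not taken from any standard hybrid scheme.

```python
import hashlib
import hmac
import secrets


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with HMAC-SHA-256: extract a PRK, then expand it."""
    if length > 255 * 32:
        raise ValueError("requested output is too long for HKDF-SHA-256")
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()  # extract step
    okm, block, counter = b"", b"", 1
    while len(okm) < length:  # expand step
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def combine_shared_secrets(classical: bytes, post_quantum: bytes) -> bytes:
    """Derive one 32-byte session key that depends on BOTH input secrets."""
    # Length-prefix each secret so the boundary between them is unambiguous.
    ikm = (
        len(classical).to_bytes(2, "big") + classical
        + len(post_quantum).to_bytes(2, "big") + post_quantum
    )
    return hkdf_sha256(ikm, salt=b"\x00" * 32, info=b"hybrid-kex-sketch")


# Stand-ins for real outputs, e.g. an elliptic-curve exchange and a PQC key encapsulation.
classical_secret = secrets.token_bytes(32)
pq_secret = secrets.token_bytes(32)
session_key = combine_shared_secrets(classical_secret, pq_secret)
print(len(session_key))
```

If either input stays secret, the derived key stays unpredictable, which is the point of layering a new algorithm on top of an older, better understood one.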

The Innovation Gap

One sad thing about cryptography is that most of the innovations in cryptography have struggled to reach escape velocity out of academia. Proxy re-encryption is a great example of this. It was first introduced in 1998, and there have been literally thousands of academic papers on the subject. Yet when we started IronCore in 2015, no one had yet attempted to commercialize it (there are now two of us and the other is focused on blockchain use cases).

But there have been many more innovations in cryptography such as multi-party computations, zero-knowledge proofs, attribute-based encryption, identity-based encryption, and more. And these have (mostly) stayed on the sidelines.

The advent of blockchain has arguably been one of the best things to ever happen to the field of cryptography. It’s generated a fire hose of capital chasing experts in the field and rewarding them and companies for turning innovations in cryptography into real-world products. I’m often a skeptic about blockchain-based initiatives and I see it as an ecosystem that’s polluted with fraud or, to be more generous to those with good intentions, gaps between the rhetoric and reality. But whatever your view of blockchain, it’s driven a ton of investment that will ultimately make it much easier to build private and secure systems that have nothing to do with the blockchain at all.

FIPS: Feature or Bug?

So the Government is slow to change. Big deal. And, sometimes, maybe, that’s good. Entrepreneurs like me might hate it, but tried-and-true is a valid strategy. So maybe FIPS is just being conservative and that is overall a good thing for those who want to protect their data and choose to or are forced to take their cues from FIPS.

Trouble #1: The Difference Between Slow and Stopped

There have been four editions of the standard:

- FIPS 140 published in 1982

- FIPS 140-1 published in 1994 (12 years later)

- FIPS 140-2 published in 2002 (8 years later)

- FIPS 140-3 published in 2019 (17 years later)

FIPS 140-2 included the algorithms AES, 3DES, ECDH, DSA, RSA, ECC using specific curve parameters, and some primitives like SHA-1.

FIPS 140-3 includes the algorithms AES, 3DES (but for decryption only after 2023), ECDH, DSA, RSA, ECC, and SHA-2.

In other words, the list has been tweaked a tiny bit here and there, but aside from the deprecation of SHA-1 and eventually 3DES, Annex A is just the same old list.

For various reasons (some mentioned below), we’ve seen a lot of alternatives come along to challenge these standards. Applied cryptographers who care about speed and security are likely to endorse Salsa20 as a symmetric cipher and the Curve25519 parameters for elliptic curve cryptography. Curve25519 may yet become a standard, which would be a stunning breakthrough, even though it’s really just a different set of parameters for standard elliptic curve cryptography. And if it does make it to a standard, it should be noted that it was first introduced in 2006.

A while back, a company called Voltage Security (now owned by Micro Focus) commercialized Identity-Based Encryption (IBE), which lets you use your email address or something like that as your public key. They had some success, but they had to make a version of their software that was FIPS-compliant, in which they turned off everything that was novel and interesting about their product. They used shared keys or left things unencrypted to meet the standards. It turns out you can have unencrypted data that is FIPS-compliant, while encrypting it with an unapproved algorithm runs afoul of the standard. Their approach was well studied and vetted, but because it wasn’t on the FIPS list, they couldn’t sell it to large classes of customers. Hence the FIPS-compliant mode that wildly decreased the security of their product for those customers that turned it on.

The identity-based encryption that they used leveraged an operation called a “pairing” on an elliptic curve. The standard curves would not be secure choices for this technique, as there are no “pairing-friendly curves” blessed by NIST. My own company’s proxy re-encryption algorithm also relies on pairings, as do a lot of zero-knowledge proof approaches. Pairings have been a basic building block of cryptosystems for decades.

Voltage attempted to get a FIPS certification, and they also attempted to get a pairing-friendly curve blessed by NIST, but in the end, those attempts stalled out and died. It’s important to note that there were no known security issues with them, nor any other stated objections. The attempt was pocket vetoed by the Government through inaction.

Trouble #2: The “Backdoor” Perception

This all raises the question: why are we holding the status quo at 1990s levels? Is it just an entrenched conservatism? A feeling that what we have is good enough? A desire for simplicity? Simple bureaucracy and funding challenges? It may be any or all of these, but there is another possible explanation that’s worth examining.

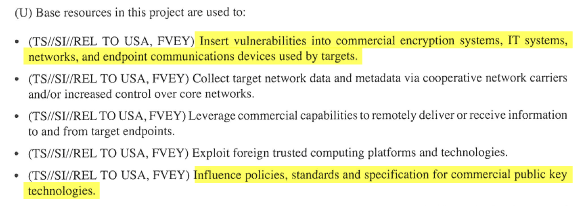

We know from the 2013 Snowden leaks that the U.S. had a $250 million per year program, called Bullrun, whose goal was to defeat encryption.

The NSA worked with vendors to change their products, sometimes paying them to do so and threatening to stop government contracts if they balked. The leaked budget request states that as part of their mission they will: “influence policies, standards and specification for commercial public key technologies.” To weaken them. They also said they would, “insert vulnerabilities into commercial encryption systems, IT systems, networks, and endpoint communications devices used by targets.”

In at least one case, they seem to have succeeded. That’s the case of the pseudo-random number generator Dual_EC_DRBG. Whether they designed the flaws deliberately or just saw them and opportunistically pushed for Dual_EC_DRBG to become a standard, we don’t know. But the NSA found great success in effectively backdooring huge swaths of infrastructure by adding that flawed random number generation algorithm to the standards. And then pressuring companies to use it.

Large security companies caught in the debacle included RSA and Juniper Networks, both of which made Dual_EC_DRBG their default random number generator. In the case of RSA, they received compensation for doing so. It also turned up in products from Microsoft, Cisco, and even in OpenSSL.

Around the same time, the NSA was pushing to standardize a pair of block ciphers called Simon and Speck. Cryptanalysts found a number of problems with them, and the NSA was accused of trying to yet again push backdoored algorithms on the rest of the world. The NSA was also accused of strong-arming cryptographers and standards committees to push it through. The NSA ultimately withdrew their submissions. Perhaps those algorithms, which were intended for lightweight IoT type devices, were fixable, but by this time the cryptographic community was feeling a bit… distrustful.

With that context, a number of cryptographers went back to re-examine the existing standards. Tanja Lange and Dan Bernstein created the SafeCurves project to evaluate the security of the parameters used in widely adopted curves, including the recommended FIPS curves like P-256. Stunningly, none of the NIST curves meet the “safe” threshold as defined by Lange and Bernstein. They challenged where the curve parameters even came from and showed that the claimed reasons (all variations on “efficiency”) were false. So these curves have very specific numbers in them with no reasonable explanation as to how or why those numbers were chosen.

Could the NSA have some way to exploit the FIPS curves that the rest of us don’t know? Is there a backdoor in P-256 or for that matter in AES? We don’t know. But it is suspicious. Which is why I’ll call this a problem with perception.

And it raises the question: is there resistance to adding anything new to the FIPS list because the new capabilities are less advantageous to U.S. espionage?

A Digression on Ethics and Cryptography

Years ago I managed a medium-sized apartment building. One day I got a knock on my door and it was the police. They were there in a friendly capacity to let me know that a tenant was dealing drugs to children out of their apartment. So I said, “what are you telling me for? Arrest him!” And they said they couldn’t make the children testify. This left me in an impossible position, because I didn’t have any more grounds to evict than they did to arrest.

But here’s the thing: the apartment wasn’t dealing the drugs. Even drug dealers have the right to housing. While the police were insinuating that I was culpable here – that I was responsible for the criminal behavior of the tenant – it just wasn’t the case. We did eventually evict this tenant, but it was for cause and it was not related to the dealing.

Years later I would co-found an encryption company and give deep thought to how encryption can be used for good – for preserving privacy and for securing data (two things this world desperately needs more of), and for bad – like for criminal activities. But like shelter, I believe privacy to be a fundamental human right, and the fact that it can be used for “bad things” does not mean it should not be available. A hammer can be used to hit nails or to hit fingers.

But things get more interesting when you think in terms of espionage. It’s in a nation’s best interest to be able to read the communications of its adversaries. We want our Government to be good at this since it can keep us safe. From the perspective of those whose job it is to read the emails of adversaries, encryption needs to be strong enough to keep out everyone else, but not strong enough to keep them out.

If a nation has this capability, though, what is to stop it from using it as a tool of oppression to spy on its citizens? In Western countries, we think we are safe from this. And in fact, practically speaking, we probably are, since we have strong court systems where legally obtained proof must be shown to convict someone of a crime. And warrantless mass surveillance captures aren’t usable in court. But jail is not the only tool in the toolbox of an oppressive Government, and threats, blackmail, etc. also need to be taken into account.

All this is to say that there are no easy answers. I expect the U.S. Government and others to work diligently at breaking and/or undermining cryptographic techniques. It’s their job. For the good of humanity and the safety and security of our economy and our citizens, though, that bar must be as high as we can make it. There are many avenues to information that don’t involve weakening our building blocks.

Where we are today is in a place where we’ve made it too easy for adversaries – be they foreign nation-states or small hacking groups – to break our software and gain unfettered access to our data. We must raise the bar, and more pervasive cryptography is an important part of the puzzle for doing that.

Moving Data Security Forward

The state of data security in the software industry is disappointing. Companies use the phrase, “encryption-at-rest” to suggest that the data they hold is well protected. But unless we’re worried about the theft of a hard drive (and we are, but that’s the very rare edge case), it isn’t. Companies aren’t protecting their data very well on their running servers. And Governments aren’t doing much better. And as we’ve seen, Governments are actively hindering companies from using better data security.

The concept of application-layer encryption is only just now catching on, but it’s far from being implemented industry-wide.

From search to data mining to machine learning, the biggest barrier to encryption is the idea that the data can’t be used once encrypted. But that’s simply not true. Innovations out of academia such as searchable encryption, fully homomorphic encryption, and zero-knowledge proofs solve those problems. But none of these things are “approved standards.” We’re hampered from using these techniques across the board because NIST hasn’t given their official blessing and they probably never will. If you want to encrypt data and still work with it, you can’t look to FIPS standards. Period.
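As a small taste of "encrypted but still usable," here is a sketch of a blind index: the client stores a keyed hash of a field next to the ciphertext, and the server can answer exact-match lookups without ever seeing the plaintext or the key. This is a deliberately simple stand-in, far weaker than the searchable encryption schemes from the literature (it supports only equality and reveals when two records share a value). The class name `BlindIndexStore`, its methods, and the placeholder ciphertexts are all invented for this illustration.

```python
import hashlib
import hmac
import secrets


def blind_index(index_key: bytes, value: str) -> str:
    """Keyed hash of a normalized field value; meaningless without the key."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(index_key, normalized, hashlib.sha256).hexdigest()


class BlindIndexStore:
    """Toy server-side store: it only ever sees blind indexes and opaque ciphertexts."""

    def __init__(self):
        self._rows = {}

    def put(self, index: str, ciphertext: bytes) -> None:
        self._rows.setdefault(index, []).append(ciphertext)

    def find(self, index: str) -> list:
        return list(self._rows.get(index, []))


# Client side: the index key never leaves the client.
index_key = secrets.token_bytes(32)
store = BlindIndexStore()

# The ciphertexts are placeholders; a real system would encrypt each record with a separate key.
store.put(blind_index(index_key, "alice@example.com"), b"<ciphertext of record 1>")
store.put(blind_index(index_key, "bob@example.com"), b"<ciphertext of record 2>")

# Exact-match search over encrypted data: the server matches hashes, not plaintext.
matches = store.find(blind_index(index_key, "Alice@Example.com "))
print(len(matches))
```

Using a separate key for the index and for the record encryption keeps a compromise of one from automatically exposing the other.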

FIPS is holding much of the industry back from adopting better security. This is an enormous problem with costs in the billions.

We can either wait for the standards to change or we can acknowledge that the standards are failing us. We need to stop being hampered by the state of the art from 20 years ago. Our current cryptographic standards are the enemy of innovation and of security. And that needs to change.