Privacy Guide to Apple Intelligence with ChatGPT

Patrick’s Privacy Practices Part 3

This is one of a series of posts on how you can protect yourself and your privacy online to the extent it’s within your power (links will be added as new parts are published):

- Part 1: Identity Theft Protection Checklist

- Part 2: Private Communications for Email and Text Messages

- Part 3: Privacy Guide to Apple Intelligence with ChatGPT (this blog)

- Part 4: Recommended Privacy Settings and Alternatives for Google, Search, and YouTube

- Part 5: Recommended Privacy Settings for iPhone and macOS

- Part 6: Privacy and Social Media

- Part 7: Basic Security

Apple’s OpenAI ChatGPT Integration Falls Short of Privacy Expectations

This summer, Apple published details on keeping server-side AI in Apple Intelligence secure and private. We tore that apart to pick out the good and the bad (mostly good) in their plans. At the time, we believed the privacy protections applied to all of Apple Intelligence, including the ChatGPT functionality. But we got this wrong. There is no private way to use ChatGPT.

If you’re using Apple Intelligence, the good news is that your first-level queries and interactions are private. Apple uses a blend of on-device models and a custom-built confidential computing environment. This custom blend protects AI queries from a range of privacy invasions, including from Apple employees and government subpoenas.

Since we last dug into Apple’s AI privacy initiatives, they’ve published more information on the lengths they’re going to for their users’ privacy, including homomorphic encryption. They’re the only company making confidential AI options available at scale. So, big kudos to Apple for this work. However, it only applies to the core Apple Intelligence functionality and not to optional features you are prompted to turn on.

ChatGPT Extension to Apple Intelligence

In particular, I’m talking about the ChatGPT integration. By default, it’s off, but also by default, Apple prompts you to turn it on, and most people won’t understand the repercussions. Apple’s excellent AI security and privacy story does not apply to their ChatGPT extension.

ChatGPT is amazing. Despite tremendous competition from other giant tech companies, they continue to produce many of the best-performing models.

When Apple’s models cannot generate a good reply, Apple may escalate your request to OpenAI. While Apple says you are in control of what gets shared and that it will always prompt you before sharing files like images and documents, that’s not necessarily true. There’s an option to turn off these prompts in settings, and they’re also disabled temporarily if your question mentions using ChatGPT.

The bottom line is that Apple is passing along some Apple Intelligence requests to ChatGPT, sometimes with your express permission, but whenever they do so, it undermines your privacy. Apple partially anonymizes you by obscuring your IP address, but even that minimal protection is out the window if you link your ChatGPT account.

Your privacy when using Apple Intelligence hinges on your specific settings and the repeated choices you are asked to make about forwarding queries to ChatGPT. It’s a nuanced, error-prone mess where privacy leaks are more likely than not.

Apple’s Statements Around ChatGPT Privacy

Here’s what Apple says about the privacy of their ChatGPT integration in full:

If you access the ChatGPT extension without an account, only your request and attachments like documents, photos, or contents of the document are sent to ChatGPT to answer your request. OpenAI does not receive any information tied to your Apple Account. Your IP address is obscured from ChatGPT, but your general location is provided. OpenAI must process your request solely for the purpose of fulfilling it and not store your request or any responses it provides unless required under applicable laws. OpenAI also must not use your request to improve or train its models. When you are signed in, your ChatGPT account settings and OpenAI’s data privacy policies will apply.

This sounds alright, but it isn’t. Let’s say you’re editing a letter and want Apple Intelligence to help you refine it. The entire letter, including your return address and the recipient address, can be sent to OpenAI, which means that any IP address obfuscation is pointless. Although OpenAI promises not to store your queries arriving via Apple, they rescind this promise if you associate the ChatGPT extension with your OpenAI account. At that point, all anonymization, promises not to store your requests, and even promises about using your data for training no longer apply. The settings on your ChatGPT account take precedence. And to make matters worse, OpenAI limits functionality (both capabilities and the number of queries) for users who don’t log in.
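To make the combinations easier to reason about, here is a minimal sketch in Python of what ends up exposed to OpenAI under each setting, based only on the Apple statement quoted above and my reading of it. The `ExtensionSettings` class and `exposures` function are names I made up for illustration; this is not an Apple or OpenAI API, just a small decision table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExtensionSettings:
    """Hypothetical model of the ChatGPT extension state (not a real API)."""
    extension_enabled: bool
    signed_in_to_openai: bool


def exposures(settings: ExtensionSettings) -> list[str]:
    """List what OpenAI may receive or do, per Apple's published statement."""
    if not settings.extension_enabled:
        # With the extension off, nothing is forwarded to OpenAI.
        return []

    exposed = [
        "request text and attachments (including any personal details in them)",
        "general location",
    ]
    if settings.signed_in_to_openai:
        # Once an account is linked, OpenAI's account settings and policies apply.
        exposed += [
            "identity via the linked OpenAI account",
            "storage of requests, per OpenAI account settings",
            "use of requests for training, per OpenAI account settings",
        ]
    return exposed


if __name__ == "__main__":
    for enabled, signed_in in [(False, False), (True, False), (True, True)]:
        settings = ExtensionSettings(enabled, signed_in)
        print(settings, "->", exposures(settings) or "nothing sent to OpenAI")
```

Note that even the signed-out case still sends the full request and attachments, which is why the letter example above defeats the IP address obfuscation.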

This is unfortunate because it makes it easy for Apple users, who expect and believe they are getting Apple’s privacy promises, to compromise their privacy.

Recommendation: Turn Off ChatGPT Extension

I recommend allowing Apple Intelligence but turning off the ChatGPT integration. If you want to use ChatGPT, you can go there directly and be explicit about what’s being shared with them.

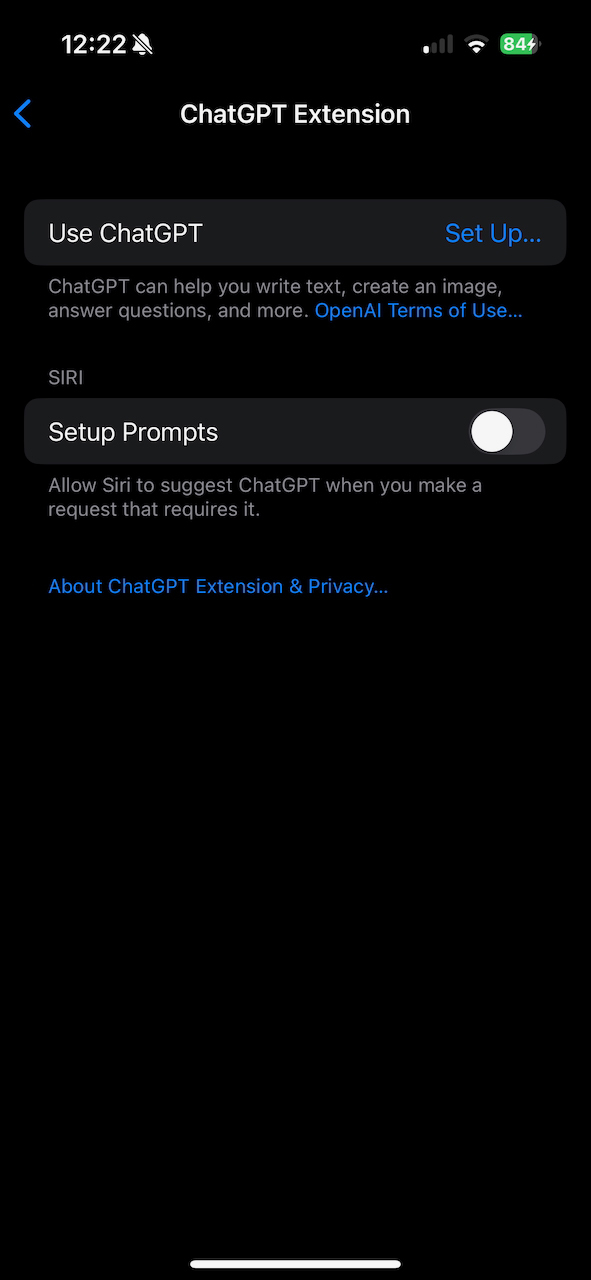

To turn off the OS-level ChatGPT integration, follow these steps on your phone (note: do the same on your Mac, too, if applicable):

- Go to Settings

- Tap Apple Intelligence

- Tap ChatGPT

- Turn off Use ChatGPT (note: if next to Use ChatGPT it says Set Up..., then it’s already off)

- On the same screen, also turn off Setup Prompts

Your settings screen should now look like the image above, with the Apple Intelligence ChatGPT extension turned off.

If you’re extra paranoid or you manage other people’s phones (for example, your children’s or your company’s), you can also block ChatGPT from getting turned back on by forbidding it under Screen Time.

Final Thoughts

Some may feel that the controls Apple gives – with the right settings – are good enough to manage their privacy. I think it can be managed with care, though I certainly believe it’s error-prone. I don’t think Apple should have allowed someone to link ChatGPT queries through Siri back to their account. It undermines everything they’ve been building and makes it impossible for someone like me to tell people that Apple Intelligence is safe to use.

The AI gold rush and the fast adoption of some pretty great technologies are causing people to overlook the basics of privacy and security. 100% of the top 20 SaaS companies are adopting large language models and GenAI. 100% of the top security companies are doing the same. But this tech has opened us up to all kinds of new attacks, and the defenses against those attacks are in their infancy. We’ve talked elsewhere about risks with these AI systems, so I won’t belabor the point here, but it’s scary. Suffice it to say that adoption is outpacing security and privacy by leaps and bounds, and this is not good.

Apple, which over the summer looked to be the one big tech company taking the responsible road, has decided to offer both private AI and not-so-private AI in a blended package that’s confusing and undermines trust with their customers. It’s terribly disappointing. 🙁