Build Your Own Application-Layer Encryption?

Non-obvious Considerations and Why It’s More Complex Than It May Seem

Application-layer encryption (ALE) is becoming a more prevalent as development teams adopt it to protect the data they hold for their customers – often at the customers’ request. The core concept is that data is encrypted before it’s stored so that someone with direct access to a data store sees just gibberish for sensitive fields.

IronCore Labs has been developing, selling, and refining ALE systems now for almost a decade. Our platform supports multi-tenant, multi-key systems that are highly secure, performant, scalable, resilient to failures, and a huge productivity boost to app developers. We layer on optional per-tenant customer-held encryption keys, data transparency, security event streaming, and other advanced security offerings at the same time.

As the popularity of ALE grows, so do the number of teams who are addressing buy vs. build decisions. This blog covers some of the lessons learned and problems solved here at IronCore with our SaaS Shield platform.

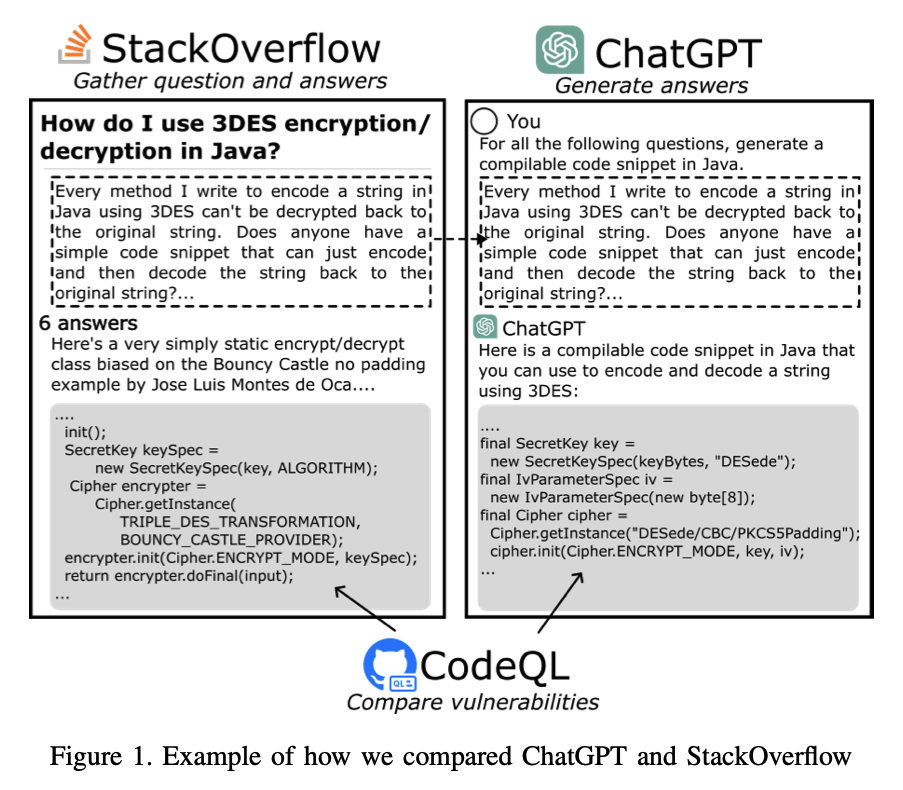

Choosing to build your own encryption solutions is risky, and the “gotchas” are often far from obvious. For many software problems, for example, a glance at StackOverflow might get you where you need to go. With cryptography, a study from a few years ago showed that 60% of code snippets on StackOverflow had security problems.

We looked for more up-to-date research and found a paper comparing ChatGPT code generation to StackOverflow answers. In that paper, out of 189 stack overflow questions with runnable code snippets as answers, and using ChatGPT to answer the same questions, StackOverflow had 83 code snippets with vulnerabilities and ChatGPT produced 87.

Encrypting data is deceptively easy. Anyone can grab a library and call a low-level encrypt() function. Done. Right? Well, maybe not. Even just calling encrypt() can get you into tons of trouble.

But designing crypto-systems that are secure and able to handle lifecycles and workflows is next level difficult. It can look easy, but security and scalability problems lurk.

You should only build crypto-systems if you have a strong foundation of knowledge. Do not build one while relying on StackOverflow or LLMs to get the tricky bits of the cryptography right for you.

Requirements for our fake app

We’re going to use a scenario based on conversations with various development teams. To set it all up, let’s outline our fictitious app and its evolving needs.

Note: any client-server app with stored data can use ALE, but to highlight complexities, we’ll make these base assumptions:

- Multi-tenant SaaS cloud app

- Each customer (tenant) has their own data without sharing across customers (typical in a business app)

- There are a number of fields that contain sensitive information such as communications between users and their email addresses

- The dev team is building inside AWS (though it could be anywhere)

- The dev team prefers to use MySQL and S3 to store data

This application already exists and uses standard infrastructure-layer transparent encryption to protect data on hard drives (when servers are off or disks are removed) and https, but recently customers have been agitating for better data security. In particular, they want the sensitive fields of their data application-layer encrypted, and they want their data to use a different key from anyone else’s. Some of the customers making these requests would also like to be able to hold their own keys so they can manage the keys under their own policies and control things like rotation schedules, algorithm and key size choices.

Customers want this higher level of protection because

- they’re reviewing security across all of their vendors to reduce their risks now that costs for breaches are going up, and

- they’re concerned about your employees peeking at data that could be used for insider trading or other purposes.

A few international customers are also talking about data sovereignty issues.

A proposed “simple” architecture

Let’s imagine building an ALE system in a way that seems straightforward. The proposed architecture might look something like this:

- We create a key for each customer in our AWS KMS and set each to auto-rotate.

- If a customer wants to hold their own key, we’ll put the information for how to connect to their AWS KMS key slot into our database, and we’ll just call theirs instead of ours (presuming they’ve configured access for our AWS instance).

- We just need to know for each tenant which key slot and AWS server to call out to.

- We can even require that they keep their KMS in a specific region or regions to make things more performant.

- We will use storage-level encryption capabilities like:

- S3’s server-side encryption with customer-provided keys (SSE-C) and

- MySQL’s native encryption so we can still use built-in functionality on encrypted data.

These seem on the surface like reasonable choices. It offers a path of least resistance to get an ALE feature up and running. Since Amazon manages the keys and our storage options have ways to encrypt the data with those keys, it seems straightforward. But there’s a lot here that’s problematic.

Hidden complexities with key management

Let’s look at the problem of keys first. Developers often think, “well, I can just store keys in my local key management server (KMS). Problem solved! AWS does everything for me.”

In fact, KMSes do a lot of work, and leveraging them is smart. But like those encrypt() functions, they’re low-level components that need to be managed and used properly as part of a carefully designed and implemented system.

First, if you fetch keys from a KMS, you’re already doing it wrong. Any key in a KMS should be created there and should be marked as non-exportable, meaning it can’t be downloaded even by an admin. This is best practice. To use the key, you call the KMS and ask it to encrypt, decrypt, or sign something. Why? Because the KMS has the best security in your architecture, and if you can prevent keys from floating around and possibly getting copied or compromised, you can establish assurances about data, know who has accessed what and when, and can ensure no one walks away with the keys to the kingdom.

Once you’re using the KMS to encrypt and decrypt data, you’ll immediately run into trouble with bigger files. Most KMSes won’t support them. And even if they do, your performance is going to be poor while your costs get high.

Another point: when you’re using infrastructure-layer encryption, it’s fine to just point back to one key and call it a day. But with ALE, that’s not best practice. That’s partly because of the points above, but also because reusing a single key over and over can lead to problems. For example, it increases the possibility that you’ll have an IV collision where the same key and IV are used for different inputs, though if you’re using proper secure number generation this risk should be pretty low. People who reuse keys often can also mistakenly use them in different ways as a secret for different algorithms, for example, which is a huge no-no.

To avoid these problems and others, experts recommend an approach that uses a unique and random key per piece of data (field, row, or file) and a unique and random IV as well. This is a pattern called envelope encryption that uses random document encryption keys (DEKs) to encrypt the actual data. Each bit of data has its own DEK and IV. The DEK is then itself encrypted with the master key (the one in the KMS), becoming an “EDEK.” The EDEK then needs to be stored alongside the encrypted data. This is the gold standard approach, but it adds layers of complexity to manage.

DEKs are small, so calling out to the KMS to encrypt or decrypt a DEK should be efficient.

But what if you’re fetching a bunch of rows and need to decrypt all of them quickly? Are you making 100 or 1,000 or more calls to a KMS for this one operation? Is that going to perform? How big a bill will that be for you (or your customer if they’re holding their own key)? And how long will that take? These are problems you need to solve early.

If you’re using IronCore, we take care of all of these problems. We minimize KMS interactions and keep things superfast – we’ll describe more of how that works later.

Handling key evolutions

And here’s another problem… NIST just approved some new encryption algorithms as standards for post-quantum cryptography. And guidelines on key sizes keep changing. What happens when a customer needs to move from a 128-bit key to a 256-bit key? Or from an RSA key to a CRYSTALS-Kyber one to meet post-quantum policy or legal requirements? That isn’t a simple rotation within a key slot. It requires a new one.

At a minimum, the design now needs to account for two KMS key slots per tenant and then needs a mechanism for either using both the old and new over time (old data decrypts with the old key, new data encrypts with the new key), or for re-encrypting all the data by decrypting with the old key and encrypting fresh using the new one. But you’ll probably need to be able to do both things to handle different use cases. (IronCore supports both key evolution cases, where the old keys stay available, and where the old keys won’t be available. IronCore supports not just two KMS key slots, but an arbitrary number).

But wait, how do you identify which KMS key slot something was encrypted with? You need to know this if you’re going to re-encrypt data or have multiple possible master keys, only one of which can decrypt a particular piece of data. Did you put a header on that data with an identifier so you can know how it was encrypted, how to decrypt it, or if it needs to be re-encrypted?

The original design now needs to put headers on ciphertext so it can be decrypted in the future.

But wait, adding ciphertext headers isn’t possible when using storage-level encryption like with MySQL or S3 SSE-C. Certainly there’s no baked-in mechanism, and it’s non-trivial to hack one in, if it’s possible at all. With SSE-C, you can’t fetch the data without the right key, which makes a chicken and egg scenario with that header. The design needs to expand to accommodate not just EDEKs, but also tracking metadata indicating how something was encrypted and with what key. And that data may need to be stored differently for different data stores.

You also need to consider transition points with a customer going from using a key you manage to holding their own key. You need to consider the impact on your infrastructure and the amount of compute time required if you have to re-key data (IronCore provides efficient ways to handle this). You need to consider how it works if a customer that was initially set up in one country (say the U.S.) wants to shift to another country and how you manage that transition. Will you be able to ensure that their data can only be decrypted in the new country?

Using MySQL’s built-in encryption

Using MySQL’s encryption is a terrible idea. Our blog on that subject covers lots of reasons why, but consider for starters that by default, MySQL uses AES-ECB-128. That’s a mode that doesn’t even take an IV and is effectively deterministic per block. It leaks a ton of data. (Oh, and Java also defaults to ECB if you don’t specify.)

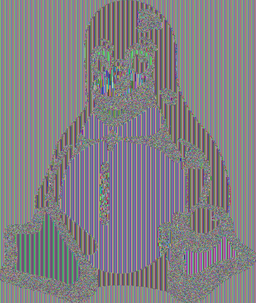

There’s a great illustration of the problems with ECB in the form of an encrypted image where you can still make out the original. Source: wikipedia block cipher modes:

Penguin image, unencrypted

Penguin image, encrypted with ECB mode

But that’s just the start – there are other inherent issues.

You might want to use a key per tenant (customer) for all of their stored data or a key per-column per-tenant so you can do nice server-side queries that, in a single SQL statement with a single key, can filter results. Already you can’t use the envelope encryption approach. Worse, MySQL makes it hard to use a per-row IV even if you’re using a non-default encryption mode.

MySQL's encryption is security theater.

With MySQL, you’re likely reusing keys and IVs across rows and maybe across columns, which tremendously undermines the security of the protected data. To understand this better, we recommend a search on “IV reuse attacks” – there are a lot of papers on the subject. Take a look at this one that bit Samsung, showing how even sophisticated companies who consider cryptography to be a core competency can mess up IVs.

As convenient as it may seem, encrypting inside MySQL will lead to many woes from security issues that you might not even realize to performance and scalability issues that you certainly will notice. So now you need to modify your design to encrypt in the application before sending the data to MySQL and to find ways to efficiently filter and find data. (IronCore has a lot of recommended strategies and tools for implementing those, from encrypted search to exact match filtering to post-filtering patterns).

S3 SSE-C considerations

Amazon’s SSE-C is a good product and a reasonable choice, but there be dragons. It has hidden complexities and pitfalls.

For example, do you use pre-signed URLs? How will you use them with SSE-C? Are you going to hand out the unencrypted keys so an end user can see them? If you are following best practices and have a unique key per file then perhaps that’s acceptable, though giving someone a key that lives beyond their right to access a file isn’t great. And if you are reusing keys, you’re in even bigger trouble now and that pre-signed URL with included key is a vulnerability.

Or maybe you avoid using pre-signed URLs and instead have your app stream all S3 data back out to the customer and have your app also accept uploads and then re-upload them to S3 in a second step? That’s not great for performance, memory requirements, disk requirements, failure modes, and more.

Consider what happens if someone moves a file in an S3 bucket to a new location but forgets to update the reference from the related EDEK, which has been stored in the SQL database? (We’re assuming here a modified design using per-file EDEKs stored in the DB, because after everything we’ve said so far, you’d never re-use keys across your S3 objects, right?)

A file move in S3 that didn’t also trigger an update to the metadata in the database would likely make the moved file into a virtual paperweight – no longer useful except for taking up space. Perhaps you could get around this by using UUIDs for all file names and then making a policy forbidding any renaming, but that’s also a brittle approach, and it’s one more thing for the list.

The point is, S3’s encryption offerings (unlike MySQL’s) are pretty great, but using them takes a fair bit of careful design and coding. These are just some of the pitfalls we’ve seen. IronCore offers an S3 proxy that handles all of this automatically without any code changes. The proxy makes use of SSE-C so that AWS functions can be utilized over S3 data. This is one of the few places we don’t encrypt before sending to the storage layer and trust the storage layer to see a key, use it, and then immediately drop it from memory. But we use SSE-C correctly to avoid the problems mentioned above. And for those who don’t want to trust Amazon that far, we provide ways to fully encrypt before sending, too.

Customer KMS connection info and consequences

Now let’s talk about the choice to store the access information for the KMSes in the database, whether they are for your customers’ KMSes (in the customer-held key scenario) or your own KMSes with per customer key slot info (for those customers happy to let you hold the key for them).

If someone compromises the database server and finds the connection info, can they decrypt the encrypted data? In most cases, yes. Your customer has likely granted your entire infrastructure the ability to access their key slot, which means their key can be utilized with calls from a compromised database server or app server without issue. It turns out that holding key access information in the database is tantamount to keeping the raw keys there.

Okay, so maybe you can use AWS secrets manager or parameter store to lock that down and separate the configuration information from your database? That’s a start. But can’t your admins still view what’s in there? What if an admin is subpoenaed for that info? What if Amazon is?

Forget subpoenas, what happens if an attacker gets a virus on an admin’s computer and it steals all their cookies from their browser, bypassing MFA? That’s not a hypothetical, it’s one of the top causes of breaches (source: Cost of a Data Breach Report). And with that, your ALE and your customer data is pwned as badly as your infrastructure. What was the point of adding an extra layer of security?

That wouldn’t happen with IronCore’s solution, because we protect the KMS connection data even from your admins. Customers enter the data and then encrypt it in their browser before uploading it. We make it extremely difficult for anyone except the person who entered the data and their co-admins to see those values. We only make it available to the dedicated systems that orchestrate keys.

Customer-held keys, data sovereignty, and performance

We mentioned problems with tons of calls to a KMS and the resulting performance and cost issues, but how does that change when you need to address data sovereignty issues?

For various reasons, some customers will operate in countries concerned about, for example, the U.S. or China gaining access to their citizens’ data. In the U.S., this plays out through FISA courts and secret subpoenas. Non-U.S. citizens have no privacy rights under U.S. law so law enforcement can request any data they want without a judge needing to weigh privacy concerns or 4th amendment issues. Those non-U.S. citizens have privacy and due process rights within their own country, but these are bypassed when a U.S. company holds their data and U.S. law enforcement wants access to it. And this is why so many countries are passing data sovereignty laws designed to ensure due process and privacy protections for access to their citizens’ data.

It turns out, you can build a system that uses server-side encryption to protect data, to stop insiders from viewing it, and to ensure that any access to the unencrypted data requires the courts of the country with the data sovereignty law to get involved in a process that uses something called MLATs. The U.S. and other countries can still get access to the data through cooperation with foreign law enforcement counterparts.

We spell this out for a reason: Amazon, Google, Microsoft, and Oracle are all U.S. companies. They hold keys and run infrastructure for a majority of data held by software companies in the West. They and you can both be subpoenaed. To meet data sovereignty requirements in, say, Germany, you need a German company to hold a customer’s key. But you cannot do that when you’ve chosen to only work with the AWS KMS.

So maybe you think, “it’s fine, when a customer really needs this we’ll add support for connecting to other KMSes.” Not so hard, right? If only.

You’re probably not realizing that you’re introducing potentially huge performance and cost issues with many calls across clouds and across countries and oceans. You’re making your service more brittle, too, when there are network issues somewhere in the world. And now you need your code to become a bit more abstract about how it calls out to KMSes. You’ll need to refactor everything. And then you’ll realize that different KMSes provide different capabilities, and you have to backfill capabilities that Amazon provided but that something else doesn’t.

Now you’re rewriting your ALE stack and redesigning it yet again to handle a foreseeable evolution of needs from your customers.

And if you’re selling internationally and thinking about data sovereignty concerns, have you also considered which of your servers can see which KMS connection information and how to control which servers and employees can access it?

At IronCore, we’ve figured out how to handle data sovereignty, distant KMSes, a heterogeneous set of KMSes across customers or even within a single customer, segregating keys and configuration information by region, and more.

And we’ve figured out how to do it at scale and with low latency.

For example, we have batch end points for quickly handling decryption of lots of rows. We have leased keys to buffer against network slowness. We have secure caches. We pre-warm the caches so everything starts fast and stays secure. We’re still just skimming the surface here. These problems are hard and they’re deceptively deep.

Secure memory and control

DEKs, when unencrypted, should never hit disk and shouldn’t stay in memory for a moment past their use. Seems easy, right? But wait, what about swap space? And how can you make sure that when freeing memory, keys are actually gone? If you’re in a JVM, you can’t even overwrite strings (they’re immutable behind the scenes) and secrets could hang around for ages until they eventually get garbage collected. To avoid lots of secrets hanging around in memory or on disk, you need to code your systems in a lower level language like C or Rust. (IronCore uses Rust, though we have SDKs in numerous languages).

More tricky problems

It’s a much, much deeper well than it seems at first. After doing this now for years for large companies with some of the largest customers in the world using our HYOK solution, we’ve found and handled endless tricky problems buried in this seemingly simple world of an ALE crypto-system.

We include user interfaces which guide customers to do the right thing when setting up keys, workflows, lifecycle management, key tracking, rotation handling for keys, KMSes and algorithms, and efficient re-encryption. We offer your customers (who don’t have to know we’re involved) the ability to get real-time audit trails and security events directly into their log sink for compliance and assurance purposes.

And we offer encrypted search for finding encrypted data given an encrypted query, even with wildcards and autocomplete, and even in AI workflows using vectors – all without having to decrypt anything but the final search results.

The dark and costly DIY path

The longer you go down a DIY path, the greater the sunk cost and the bigger and more difficult your future problems will become. You’ll be less flexible, less featureful, and it will end up costing millions to build and maintain. It’s not your core competency, and shortcuts that are taken will ultimately show up in the security of the system and the performance of your product – particularly as it evolves over time.

This is not functionality you should build or forever maintain yourself. Don’t even put it off with a stop-gap. It may be a year or even a couple of years before your decisions really bite you. It is an absolute certainty that over time it will cost you significantly more and create unnecessary friction with security-conscious customers (both existing and future prospects) if you build it in-house. And if you feel you’ve already invested so much that you’re committed, think again. The DIY solution will be a weight around your neck for as long as you need to maintain it – which will be forever.

When you use IronCore SDKs, you’re calling into a full crypto-system that has been built with huge attention to security, performance, recoverability, lifecycles, portability, and crypto-agility. Our solution is great at this stuff. It isn’t zero effort, though. You’re still implementing ALE, and there are implications for that and probably code changes, too, depending on what you need to do. Just don’t make the mistake of confusing the effort required to use a polished, high level solution with the effort required to use low level tools to build your own.