The Encrypted Messenger Wars

Telegram vs. Signal: what really matters

If you haven’t been tracking it, the CEO of Telegram, a messaging app company, wrote a post telling people that they shouldn’t trust Signal, a competing app run by a non-profit dedicated to privacy, and implying that messages on Signal can be read by the U.S. Government. Elon Musk joined the fray on X, saying that Signal had refused to fix known vulnerabilities for years and that he found them suspicious, too. I’m not going to rehash the whole thing, but you can get the details and links to the relevant threads at Business Insider.

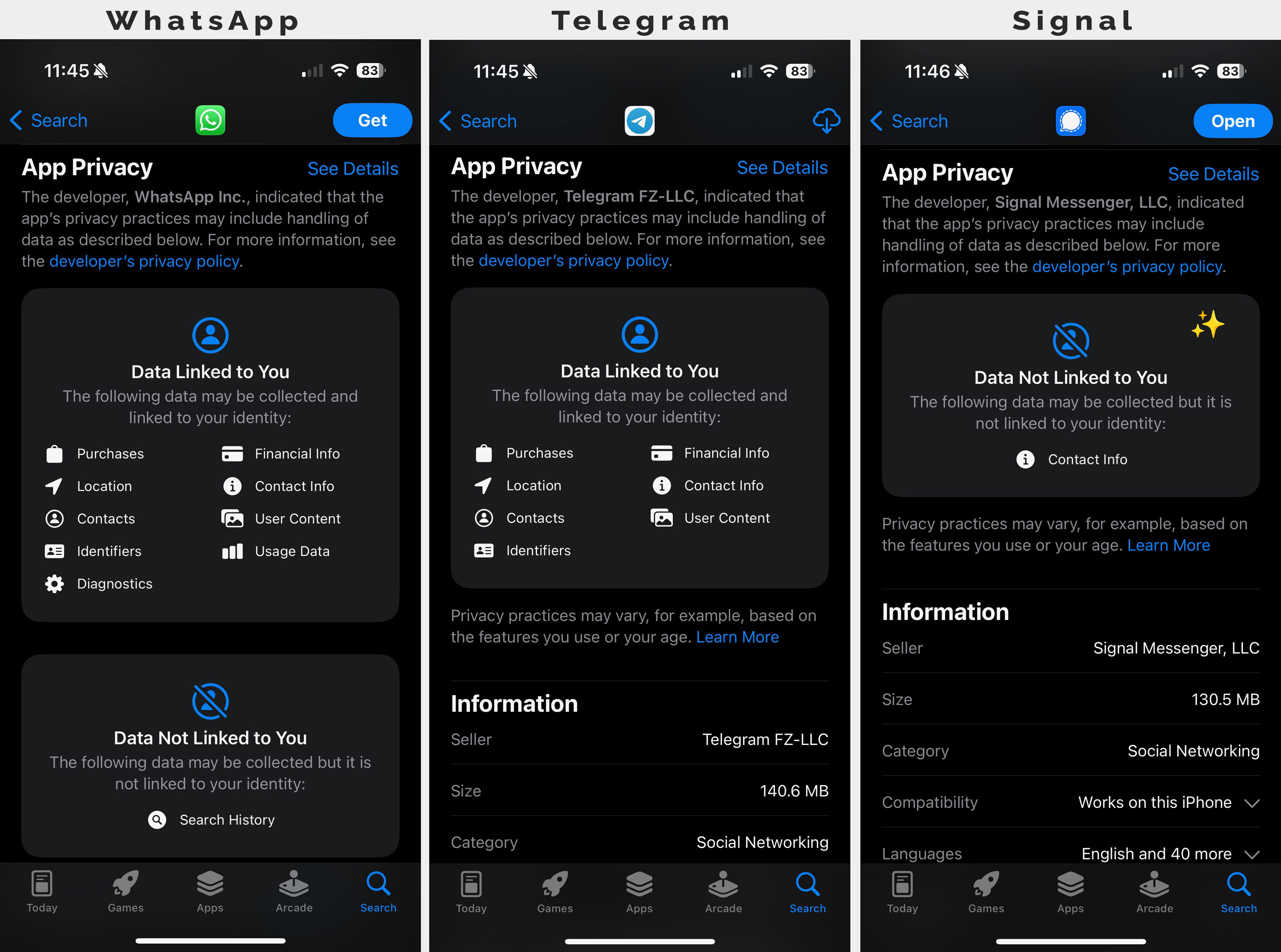

That story is about a for-profit app whose messages are insecure by default throwing shade on an organization whose entire purpose is to serve privacy. I’m on Team Signal here, as I’m familiar with their fantastic protocol, which enjoys the high regard of top cryptographers. Telegram’s encryption, on the other hand, is widely derided. And the baloney being thrown around by Musk about known vulnerabilities is provably false because of Signal’s transparency. And just for fun, let’s compare the App Privacy disclosure for them on the Apple App Store:

But look, use Telegram or Signal or WhatsApp or whatever you trust and are happy with. If it supports end-to-end encryption, you’re already winning as a consumer. I’m not going to go any deeper than the above on which is the most trustworthy. Instead, I want to talk about what I see as way more important: why so few apps provide strong security and why many of those default to having it turned off. Because this is the real problem.

Security by design and by default

I worked for years building network security solutions (intrusion detection and prevention, anti-virus, VPN), and I ran threat research teams around the world. Ultimately I came to believe that there is no way to secure everything by defending the perimeter. The biggest leaps in the war against malware have come when operating systems added protections against certain classes of memory attacks and started enabling features such as allowing only signed software to run on a machine. Some of our best hope for the future comes from programming languages like Rust that make entire classes of vulnerabilities impossible by construction.

The principle of security by design is simple: when architecting software (or operating systems or programming languages or whatever), ensure that security is as core to the design as user experience, monetization, performance, and scalability. Seems simple and obvious, but it’s sadly uncommon to see security by design. At big companies, you often have one person architecting the software and then later in the process a security architect comes in to review the designs. That’s not the worst, but at that point, many decisions have already been made and the reviewer generally can tweak but typically won’t upend the entire apple cart. Which is too bad since early decisions about tech stacks and other things can greatly impact the security of the resulting software.

The principle of security by default is also simple: where strong security is possible, turn it on out of the box. A user may be able to turn it off, but they don’t have to turn it on. This also seems sort of obvious, but let’s look at some big companies and how they handle security:

- Zoom: after getting caught claiming to have end-to-end encryption when they didn’t, as part of an FTC settlement, Zoom promised to add end-to-end encryption. They bought an encryption company and hired some great people to build the feature. Then they made it off by default and didn’t invest in ways to make basic functionality work with it, so even folks like me (privacy advocates) rarely turn it on.

- Ring: after finally adding end-to-end encryption to a few devices in their line, Ring made it hard to enable and when on, it disabled important features that minimize false alerts, among other things. And it’s off by default even for those who don’t use the incompatible features.

- Telegram: unencrypted chats by default, and they collect a ton of data.

- X: last year, after another FTC settlement and commitment to add end-to-end encryption, X finally released encrypted direct messages. But they’re off by default. If and only if the two parties are verified and meet other requirements, there is a toggle switch that must be pressed before the DMs are encrypted. Groups aren’t supported yet. That’s not security by default.

And we’re still seeing database products that require no authentication by default, despite the lessons of history with MongoDB and Elasticsearch, which learned to stop doing that the hard way.
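To make the "on out of the box, user may turn it off" idea concrete, here is a minimal sketch in Python. It is not taken from any of the products above: `ConversationSettings`, `new_conversation`, and `opt_out_of_encryption` are hypothetical names invented for illustration. The only point is that the protective values are the defaults and weakening them takes an explicit, visible step.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class ConversationSettings:
    """Hypothetical settings object; every field name here is illustrative."""
    end_to_end_encrypted: bool = True    # strong protection is the default
    require_authentication: bool = True  # no anonymous access unless chosen


def new_conversation(settings: Optional[ConversationSettings] = None) -> ConversationSettings:
    # Callers who pass nothing get the secure defaults.
    return settings if settings is not None else ConversationSettings()


def opt_out_of_encryption(settings: ConversationSettings) -> ConversationSettings:
    # Weakening security is a deliberate act, never the starting state.
    return replace(settings, end_to_end_encrypted=False)


default_settings = new_conversation()
weakened_settings = opt_out_of_encryption(default_settings)
```

The off-by-default pattern in the examples above is the mirror image of this: the field would start as `False` and the user would have to find the switch.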

It’s sort of funny that Musk is throwing Signal under the bus when they have about the best security you could hope for and X makes it actively difficult for users to achieve any sort of privacy.

Security by design and by default seems obvious and there’s absolutely no excuse for companies not to follow these principles. (And in fact, there are even regulations, like GDPR Article 25, that require some version of it). Yet here we are.

Privacy by default > privacy options > no privacy options

I continue to be disappointed by the attacks being made on excellent software like Signal. But frankly, even attacks on Telegram are a little annoying. Yes, I see my hypocrisy here. I just criticized Zoom, Ring, Telegram, and X for not enabling privacy and security by default, but really, I’m more likely to use those products than many of their competitors who don’t even have options for privacy. They’re miles ahead. Zoom, for example, at least has the option of end-to-end encrypted video conferences whereas Google Meet and others do not.

We all want better security and privacy. Ultimately, we all want perfect security and privacy. But perfect can be the enemy of good when engineering teams feel immediate perfection is too costly and difficult. That view ignores the incredible reductions in risk and improvements to privacy that can come as companies embark on the path towards more private systems. Incremental progress is important progress. For example, although end-to-end encryption is always preferred from a privacy standpoint, server-side application-layer encryption with per-user (or per-customer) keys can still protect against insiders peeking at data, which is way better than most cloud software. I’d be happy to see more companies doing that.
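As a rough illustration of the per-user key idea, the sketch below derives a separate key for each user from one master key using HKDF (RFC 5869) built on Python's standard `hmac` and `hashlib` modules. Deriving keys this way is an assumption on my part and only one of several possible designs; `derive_user_key` and the `example-app/...` label are hypothetical. It covers only the key-separation step: the actual encryption should use a vetted authenticated cipher from a well-reviewed library, and the insider protection depends on the master key being locked away from ordinary staff and services.

```python
import hashlib
import hmac
import secrets

HASH_LEN = 32  # SHA-256 output size in bytes


def hkdf_sha256(key_material: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with HMAC-SHA256: extract, then expand."""
    if not 0 < length <= 255 * HASH_LEN:
        raise ValueError("requested length out of range for HKDF-SHA256")
    # Extract: condense the input key material into a fixed-size pseudorandom key.
    prk = hmac.new(salt or b"\x00" * HASH_LEN, key_material, hashlib.sha256).digest()
    # Expand: chain HMAC blocks until enough output bytes exist.
    output, block, counter = b"", b"", 1
    while len(output) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        output += block
        counter += 1
    return output[:length]


def derive_user_key(master_key: bytes, user_id: str) -> bytes:
    """Derive a 32-byte key bound to one user (hypothetical helper)."""
    info = b"example-app/user-data-key/v1:" + user_id.encode("utf-8")
    return hkdf_sha256(master_key, salt=b"", info=info)


# In practice the master key would live in a KMS or HSM, not in process memory.
master_key = secrets.token_bytes(32)
alice_key = derive_user_key(master_key, "alice")
bob_key = derive_user_key(master_key, "bob")
```

Because each user's data is encrypted under a different key, a leaked or misused key for one user exposes only that user's data rather than the whole database.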


When we take aim at the best security in the industry and point at ways that it falls short of our ideals, we shoot ourselves in the foot. Yes, we want even better and that’s okay, but we have to acknowledge that the minimum bar right now is no bar at all. Perfect privacy and security are often extremely difficult to achieve in a world of interconnected systems and collaboration.

This Signal vs. Telegram “crypto war” is a side show. The real war is in fighting against apps that don’t have reasonable encryption at all.