Vector Encryption, AI, and the Slow Pace of Standards

When Will NIST Bless AI Data Protection?

We’ve turned academic research in cryptography into usable and useful libraries a few times now. We built out a unidirectional multi-hop proxy re-encryption scheme and wrote papers on what we changed and how we applied it to bake access controls into data for end-to-end encryption and other use cases. More recently, we’ve released tools to take advantage of distance-comparison-preserving encryption, bringing privacy to AI data including training data, models, and vector search indices.

One of the most common questions we get when we work with non-mainstream cryptography is about whether or not it’s a standard or whether NIST has issued an opinion on the approach. And these are good questions.

NIST standards history and recent changes

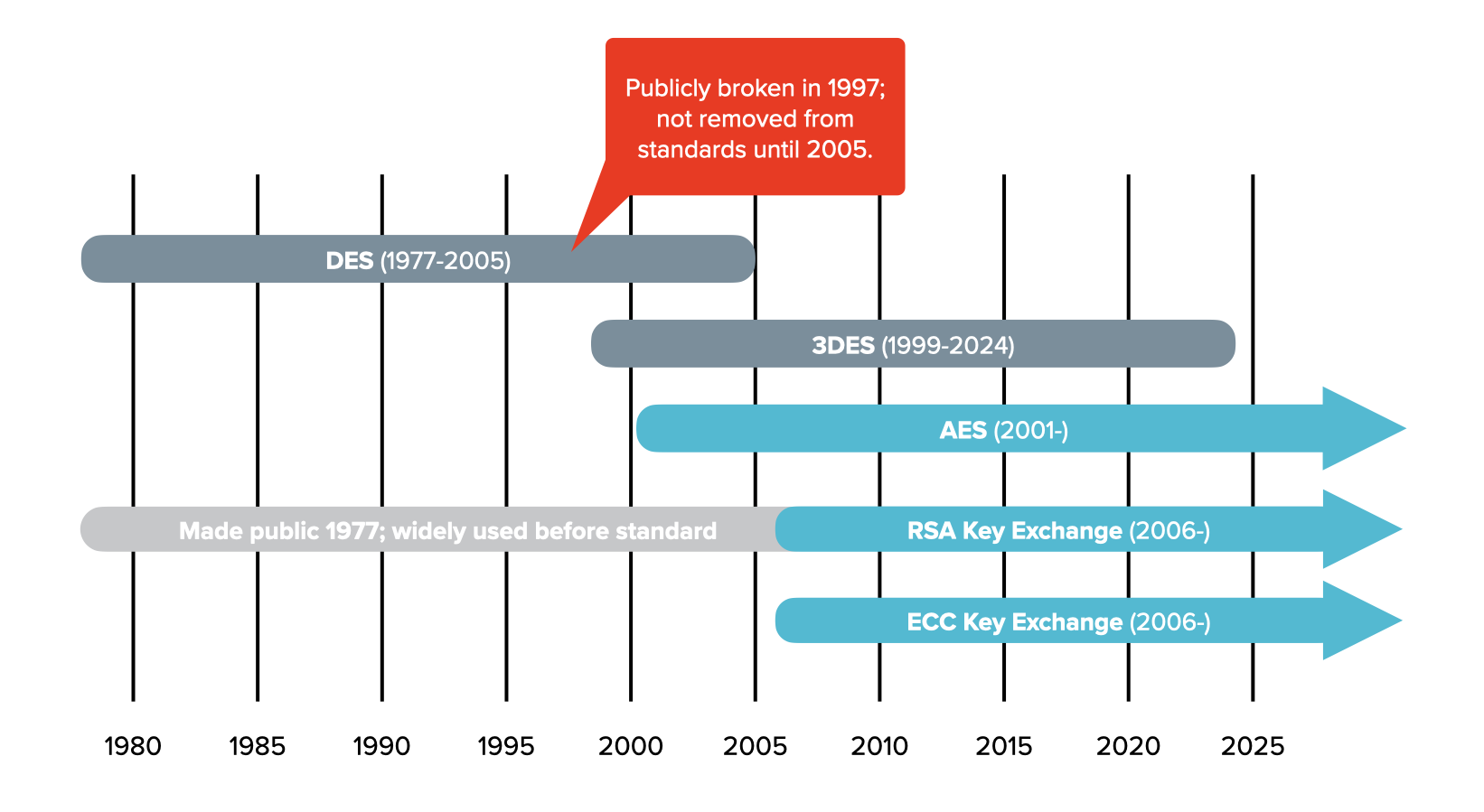

It’s wise to be distrustful of new cryptography, and especially so if the algorithm is secret (though that’s not the case with anything we do). The best cryptography has been attacked and refined for years or decades, which increases confidence in its security. That’s why we collectively still use AES, ECC, and RSA (though two of those three arguably should have a deprecation timeline due to quantum computing). AES has been around since ~2000, and RSA goes back to the 1970s.

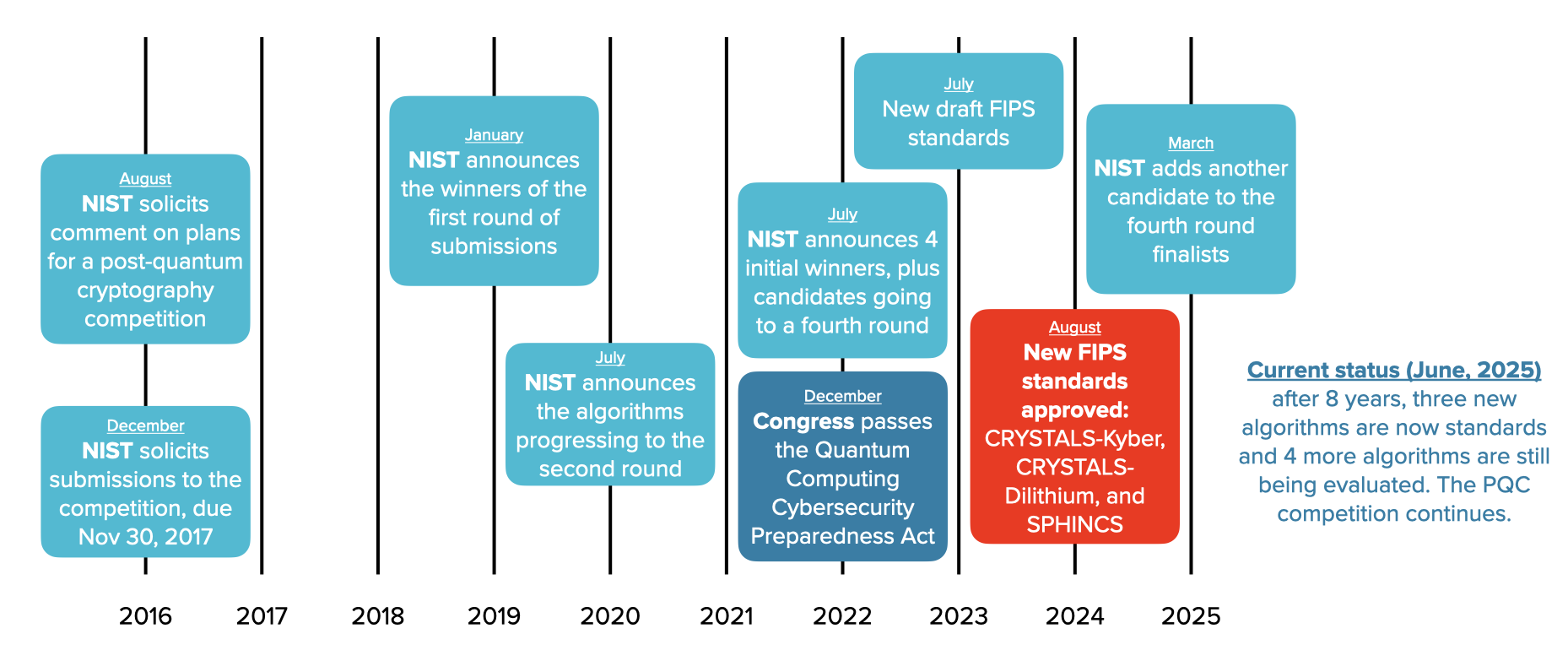

After 20 years of nearly static standards, the prospect of more powerful quantum computers, together with presidential edicts, finally spurred NIST into creating new standards. To do so, they created a Post-Quantum Cryptography (PQC) competition that started in 2016 and saw its first standards in 2024 (with more theoretically on the way). But this cryptography is a direct replacement for the existing public-key algorithms used for key exchange and digital signatures.

Privacy-enhancing cryptography and AI

Usable, privacy-enhancing encryption, such as fully or partially homomorphic encryption, is a newish field that covers many different algorithms with varying degrees of security and trade-offs. I don’t believe NIST has standardized encryption-in-use of any kind, but in 2019 they created a working group to study these techniques (the PEC). Unfortunately, the group doesn’t have a goal of creating standards around privacy-enhancing cryptography, so it’s more like a show-and-tell series.

Separately, NIST has been doing some work on AI security, putting out a draft security framework and a taxonomy of attacks and mitigations. The latter focuses entirely on models and neglects things like training data and vector embeddings, but I expect it will continue to develop and evolve.

Big standards bodies and government agencies like NIST move slowly in most cases. Their work on AI has been fast, but has not resulted in standards. Their work developing new standards for post-quantum cryptography is some of their fastest progress ever, but that process is now in its ninth year and still going (to be fair, they got some standards out in year eight). And that speed came because the White House and Congress were focused on the problem of quantum computers, so the pressure to be ready with quantum-resistant algorithms was high.

Without pressure from Congress, it’s unlikely NIST will develop standards around AI encryption, vector encryption, or any other privacy-enhancing cryptography for years to come, and possibly ever.

Which leaves those building AI systems that utilize private data in something of a bind: how do you protect the data while waiting years for standards to develop? What do you do in the meantime, and without someone like NIST, how do you know what approaches and implementations are good?

Useless infrastructure-level encryption vs. application-layer encryption-in-use

Standard randomized encryption (AES-256-GCM, for example) is obviously a good choice to fully protect data when it’s being stored and not used. But in modern cloud services, the data is being used continuously in services that run around the clock. This kind of encryption gets applied to the data at low layers, like the disk or storage layers, which only really keeps the data safe when hard drives are removed from servers.

Which brings me to IronCore’s approach. IronCore did not invent approximate distance-comparison-preserving encryption (DCPE), but mined the literature to find good approaches to protecting vectors and AI data. We pored over the proofs to understand trade-offs between papers, and we tried attacking different approaches to understand their shortcomings before we finally selected the scale-and-perturb algorithm that underlies Cloaked AI. We are transparent about our findings, and are frank about the trade-offs of this approach, which I’ll touch on below. But most importantly, it brings application-layer encryption to AI data, which means that when (not if) there’s a breach, the sensitive data remains protected.

Benefits of DCPE

We believe that today, there are two viable approaches to protecting AI vectors while they’re in use: fully homomorphic encryption (FHE) and DCPE. FHE, though, is only viable with custom vector database servers, and the performance implications make it unusable for most use cases and for large datasets.

With DCPE, you can prevent membership inference attacks, inversion attacks, attribute inference attacks, and more. If you use DCPE to encrypt training data, you can create models built on encrypted data that require the right encryption key to use. And you can prevent insiders like ML engineers from seeing what may be very sensitive customer data. It’s a huge boon for privacy and data security since so much data is being copied into AI and because most of that data can be extracted back out.

DCPE is the only option that we know of today that can protect AI data in use without specialty vector database servers or specialty model builders and runners. It just works with existing infrastructure and programs.
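To make the "works with existing infrastructure" point concrete, here is a minimal toy sketch of the scale-and-perturb idea in Python. It is not the Cloaked AI implementation and it is not secure: the function names, the `scale` and `beta` parameters, the noise radius of `scale * beta / 4`, and the use of Python's non-cryptographic `random` module are all assumptions made for illustration. The idea it shows is that each vector is multiplied by a secret scaling factor and then nudged by key-derived noise, so an ordinary distance function run over the ciphertexts still ranks clearly separated neighbors in the right order.

```python
import hashlib
import hmac
import math
import os
import random


def _noise(key: bytes, nonce: bytes, dim: int, radius: float) -> list[float]:
    """Derive a pseudorandom point inside a ball of the given radius."""
    seed = hmac.new(key, nonce, hashlib.sha256).digest()
    # Toy only: Mersenne Twister is NOT a cryptographic generator.
    rng = random.Random(seed)
    direction = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in direction))
    # Raising a uniform sample to 1/dim spreads points evenly over the ball.
    r = radius * rng.random() ** (1.0 / dim)
    return [r * x / norm for x in direction]


def encrypt(key: bytes, scale: float, beta: float,
            vec: list[float]) -> tuple[bytes, list[float]]:
    """Scale the vector, then perturb it with key- and nonce-derived noise."""
    nonce = os.urandom(16)
    noise = _noise(key, nonce, len(vec), scale * beta / 4.0)
    return nonce, [scale * x + n for x, n in zip(vec, noise)]


def decrypt(key: bytes, scale: float, beta: float,
            nonce: bytes, ct: list[float]) -> list[float]:
    """Regenerate the same noise from the key and nonce, then undo both steps."""
    noise = _noise(key, nonce, len(ct), scale * beta / 4.0)
    return [(c - n) / scale for c, n in zip(ct, noise)]
```

In this toy, every ciphertext sits within `scale * beta / 4` of its scaled plaintext, so any encrypted distance is off by at most `scale * beta / 2`. That means whenever one plaintext pair is closer than another by more than `beta`, comparing `math.dist` on the ciphertexts gives the same answer, which is why an unmodified vector database can still run nearest-neighbor search. A larger `beta` adds more noise and more privacy at the cost of search accuracy.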

Security of DCPE

So what about the security of DCPE? Can it be trusted? It leaks information about which vectors are near which other vectors, but gives no way to figure out what those related vectors mean. DCPE is also weak against chosen-plaintext attacks, in which the attacker is able to associate a large corpus of plaintext with the corresponding ciphertext under a particular key. The attacker can train a model on these pairs and use it to roughly invert the encrypted data, though only with partial success, since the results are much less precise than an attack against the unencrypted vectors. We have more details on that attack elsewhere, including in our DEF CON 32 talk on vector encryption. Luckily, keys are typically held tightly in KMSes or HSMs, and an attacker mounting a chosen-plaintext attack would need some way to use the key in order to build a corpus that would allow them to partially defeat the encryption provided by that key. This is a pretty reasonable trade-off because keys are among the best-protected pieces of data in most infrastructures.

But how bulletproof will it be in the future? The roads of cryptography are littered with algorithms that were once thought to be secure and later found to have a weakness. DES (the original Data Encryption Standard) became a NIST standard in the 1970s, was completely broken in the 1990s, and was finally removed as an acceptable algorithm in 2005. We point this out to show that our knowledge of cryptography, even of standards, is a dynamic thing. It also shows that standards should not be blindly trusted, because the slowness to adopt standards also manifests as a slowness to deprecate them (a decade late in this case). DES should have been deprecated the same month it was proven to be broken.

We don’t currently know of any other attacks against DCPE (and neither do average hackers), but we do know that DCPE is very effective at preventing attacks on vector embeddings.

Security agility

AI is a fast-moving space with rapid adoption across many industries. It is incumbent upon implementers who are working with sensitive data to protect that data using the best technology available. It’s possible that something better than DCPE will come along or that new flaws will be discovered in the coming years. Meanwhile, it provides a ton of privacy and security and is a far better option than leaving data unprotected while waiting for standards to emerge. Today, DCPE is the best available option. The absence of public attacks doesn’t mean there are no possible attacks, but it does mean that most or all hackers who gain access to DCPE-encrypted data today will find it completely useless.

In the end, we believe it is better to protect AI data than to do nothing, understanding that we must be agile in continuously evaluating the landscape of AI threats and protections.