Training AI Without Leaking Data: White Paper Download

How Encrypted Embeddings Protect Privacy

Learn how to train AI on sensitive data without compromising privacy. This paper introduces one-way vector encryption, a practical technique for securing both training data and the models built on it, and explains how it works, when to use it, and why it's a powerful tool for building privacy-first AI systems.

Get the white paper now, for free

Privacy

Private training data

Sensitive AI training data, such as customers' private data, can be one-way encrypted at the source and never decrypted. Models are then trained directly on the encrypted data.
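
The page does not describe the white paper's actual construction. As a minimal sketch, assuming a keyed random projection is an acceptable stand-in, the code below derives a Gaussian projection matrix from a secret key and maps each embedding to fewer dimensions. Because the output has fewer dimensions than the input, many different embeddings map to the same encrypted vector, so the original cannot be uniquely recovered from it. The names `encrypt_embedding`, `projection_matrix` and `SECRET_KEY`, and the dimensions, are hypothetical; this is an illustration, not a vetted cryptographic scheme.

```python
import hashlib
import random

# Hypothetical sketch: a keyed random projection standing in for one-way vector
# encryption. Not a vetted cryptographic scheme and not necessarily the paper's method.


def projection_matrix(key: bytes, in_dim: int, out_dim: int) -> list[list[float]]:
    """Derive a Gaussian random matrix deterministically from a secret key."""
    seed = int.from_bytes(hashlib.sha256(key).digest(), "big")
    rng = random.Random(seed)
    scale = 1.0 / out_dim ** 0.5  # keeps vector lengths roughly unchanged on average
    return [[rng.gauss(0.0, 1.0) * scale for _ in range(in_dim)] for _ in range(out_dim)]


def encrypt_embedding(embedding: list[float], key: bytes, out_dim: int) -> list[float]:
    """Project an embedding to fewer dimensions with the keyed matrix (many-to-one)."""
    if out_dim >= len(embedding):
        raise ValueError("out_dim must be smaller than the embedding dimension")
    matrix = projection_matrix(key, len(embedding), out_dim)
    return [sum(w * x for w, x in zip(row, embedding)) for row in matrix]


# Encrypt at the source: only the encrypted vectors ever leave the data owner.
SECRET_KEY = b"hypothetical-secret-key"  # would come from a key store in practice
raw_batch = [
    [0.12, -0.50, 0.33, 0.90, -0.20, 0.05, 0.70, -0.41],
    [-0.30, 0.25, 0.10, -0.60, 0.45, 0.80, -0.15, 0.22],
]
encrypted_batch = [encrypt_embedding(v, SECRET_KEY, out_dim=4) for v in raw_batch]
```

Regenerating the matrix on every call keeps the sketch short; a real pipeline would build it once per key. The key itself must stay secret, since anyone holding it can encrypt new inputs into the same space.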

Security

Secure models

Encrypting the training data effectively encrypts the model, too: the model can only be used meaningfully on inputs encrypted with the same key as the training data. A stolen model is therefore a non-event, since it can't be used without the key and no data can be recovered from it.
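
To illustrate this key dependence, here is a toy nearest-centroid classifier trained on encrypted vectors. It assumes the same kind of keyed random projection as a hypothetical stand-in for the paper's encryption; `encrypt`, `train_centroids`, `predict`, the keys and the example vectors are illustrative names and data. An input encrypted with the training key lands in the space the model was trained in, while the same input encrypted with any other key generally lands at an unrelated point.

```python
import hashlib
import math
import random


def encrypt(vec: list[float], key: bytes, out_dim: int = 4) -> list[float]:
    """Hypothetical keyed projection standing in for one-way vector encryption."""
    rng = random.Random(int.from_bytes(hashlib.sha256(key).digest(), "big"))
    rows = [[rng.gauss(0.0, 1.0) for _ in vec] for _ in range(out_dim)]
    return [sum(w * x for w, x in zip(row, vec)) for row in rows]


def train_centroids(encrypted: list[list[float]], labels: list[str]) -> dict[str, list[float]]:
    """'Train' by averaging the encrypted vectors of each class."""
    sums: dict[str, list[float]] = {}
    counts: dict[str, int] = {}
    for vec, label in zip(encrypted, labels):
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, x in enumerate(vec):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [x / counts[label] for x in acc] for label, acc in sums.items()}


def predict(model: dict[str, list[float]], encrypted_vec: list[float]) -> str:
    """Return the class whose centroid is closest in the encrypted space."""
    return min(model, key=lambda label: math.dist(model[label], encrypted_vec))


TRAINING_KEY = b"training-key"  # hypothetical secret
raw_examples = {
    "approve": [0.9, 0.1, 0.4, -0.2, 0.3, 0.0, 0.5, 0.2],
    "review": [-0.7, 0.6, -0.3, 0.8, -0.1, 0.4, -0.5, 0.1],
}
model = train_centroids(
    [encrypt(v, TRAINING_KEY) for v in raw_examples.values()], list(raw_examples)
)

query = raw_examples["approve"]
right_key = encrypt(query, TRAINING_KEY)     # same space the model was trained in
wrong_key = encrypt(query, b"stolen-guess")  # lands somewhere unrelated
```

Whatever `predict(model, wrong_key)` returns is not tied to the input's true class, because the centroids only make sense for vectors produced with `TRAINING_KEY`.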

Flexible deployment

Works with any model training framework

Encrypted embeddings are still ordinary numeric vectors, so once the data is encrypted, any neural-network training framework, in any programming language and on any OS, can work with it. There are no limitations on model design or tooling.
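
Because the encrypted vectors are plain lists of floats, training code does not need to know they were encrypted. The sketch below stays dependency-free by using a hand-written logistic regression as a stand-in for a framework such as PyTorch or scikit-learn; the keyed projection `encrypt`, the synthetic data and the hyperparameters are all hypothetical. With a real framework, the same encrypted lists would simply be converted to that framework's array or tensor type.

```python
import hashlib
import math
import random


def encrypt(vec, key, out_dim):
    """Hypothetical keyed projection, scaled so vector lengths stay similar on average."""
    rng = random.Random(int.from_bytes(hashlib.sha256(key).digest(), "big"))
    scale = 1.0 / math.sqrt(out_dim)
    rows = [[rng.gauss(0.0, 1.0) * scale for _ in vec] for _ in range(out_dim)]
    return [sum(w * x for w, x in zip(row, vec)) for row in rows]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def log_loss(w, b, xs, ys):
    """Mean binary cross-entropy, computed stably as softplus(-margin)."""
    total = 0.0
    for x, y in zip(xs, ys):
        z = sum(wi * xi for wi, xi in zip(w, x)) + b
        m = z if y == 1 else -z  # margin in the direction of the true label
        total += max(-m, 0.0) + math.log1p(math.exp(-abs(m)))
    return total / len(xs)


def train_logistic(xs, ys, lr=0.1, epochs=200):
    """Full-batch gradient descent on the mean log loss."""
    w, b, n = [0.0] * len(xs[0]), 0.0, len(xs)
    for _ in range(epochs):
        gw, gb = [0.0] * len(w), 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for i, xi in enumerate(x):
                gw[i] += err * xi
            gb += err
        w = [wi - lr * g / n for wi, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b


# Synthetic raw embeddings; the label depends only on the first raw coordinate.
data_rng = random.Random(0)
raw = [[data_rng.uniform(-1.0, 1.0) for _ in range(8)] for _ in range(40)]
labels = [1 if v[0] > 0 else 0 for v in raw]

# Only the encrypted vectors are handed to the training code.
KEY = b"hypothetical-key"
encrypted = [encrypt(v, KEY, out_dim=4) for v in raw]
weights, bias = train_logistic(encrypted, labels)
```

How much accuracy survives depends on the encryption scheme and the data; the sketch only shows that standard training code runs unchanged on encrypted inputs.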

Deep learning with security

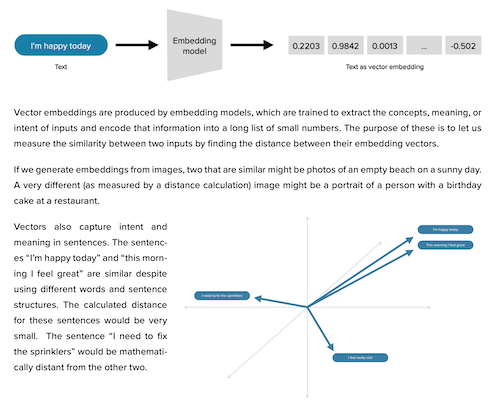

An explainer on building models over encrypted vector embeddings

Download the white paper to learn when inputs can be encrypted, which types of inputs and models are best suited to vector encryption, how AI can make data less secure, and how encryption counters those risks.