Highlights From Real World Crypto 2020

If you’re interested in how research around privacy and security is turning into real-world solutions, this is the best conference of the year. Each time I attend, I come away inspired by the work that’s being done to make our digital world better.

There were around 650 attendees this year with a 50/50 split between academic cryptographers on one side and engineers and researchers from industry on the other. The focus of the conference is on real-world applications of cryptography, real-world attacks, and real-world problems from policy to maturity of developer tools.

On Things That Are Broken

TLS 1.2 and Below

TLS 1.3 has been out and used in the wild in draft form since 2016. The final version was put out in August 2018. TLS 1.3 is faster and more secure than previous versions. Adoption so far is relatively low, but it is growing much faster than TLS 1.2 adoption did. Around 15% of the Alexa top 1 million websites support 1.3, and around 5% of all websites do.

In addition to adoption statistics, we were treated to a presentation showing timing attacks against TLS and more specifically, problems with RSA Key Exchange and padding. Although TLS 1.3 doesn’t support RSA Key Exchange (good!), the researchers were able to perform downgrade attacks on connections so that clients fell back to a vulnerable TLS version. Two steps forward, one step back.

SHA-1

SHA-1 was shown to be insecure back in 2005 (it had a good ten years before that), but it continues to enjoy widespread use in places like git and gpg. Researchers presented yet another attack showing how practical it has become (around $45k in computing time) to produce a chosen-prefix collision. As an example, they constructed a pair of GPG keys with different identities whose certificates collide under SHA-1, so a signature on one key also validates on the other.

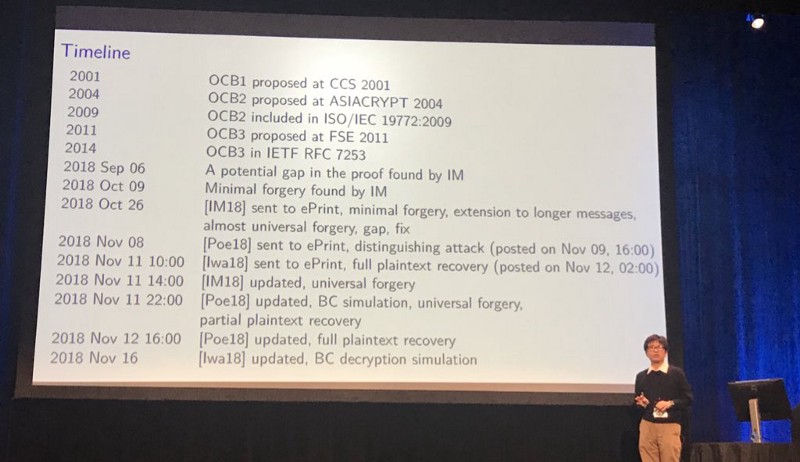

OCB2

OCB2 was thought to be secure for 14 years and was included in at least one ISO standard. Then, in 2018, a paper pointing out a possible flaw in the security proof spurred a series of attacks and papers (8 in three months), starting with a forgery attack and culminating in a full plaintext recovery attack. The story here is less about the flaws of OCB2, which is built on sound principles and shouldn’t taint OCB1 or OCB3, and more about the scent of blood in the water that caused a number of research teams around the world to race to break the algorithm.

A cryptanalytic frenzy on OCB2


Unnamed Hardware Security Module Vendor

Some “major HSM company” that protects the secrets of large organizations and Western governments has been pwned every which way. The company is unnamed, but there are only two major HSM vendors left in the world and only one of them released patches around when this research came out.

In short, they apparently spend all of their 3rd-party audit money testing how well the hardware protects secrets from hardware attacks, but standards like FIPS 140-2 don’t require them to test the software of their systems.

After these researchers showed they could remotely break in, extract all secrets, and replace the firmware with their own, I bet this will change.

The Encryption Wars

Unfortunately, every administration since President Clinton’s has attacked strong encryption, but Jennifer Granick of the ACLU reminded us that “the Government” is not one unified entity waging war here. The State Department wants tools that protect human rights, the FTC wants to protect privacy, and parts of the Department of Homeland Security, such as its cybersecurity division, believe strong encryption is needed for our security as a nation.

Unfortunately, Attorney General Barr has turned encryption into a signature issue, and this time they’re moving beyond the terrorism argument and pushing the child-abuse argument instead. Never mind, as Jennifer points out, that there are measures and money for this problem that are sitting on the sidelines right now.

Jennifer also made the point that a focus from the community on encryption alone is myopic. We should be worried about metadata collection, warrantless access to commercial data, collected data retention times, law enforcement hacking, and a host of other issues.

This talk had a number of great points and included reasonable discussion of trade-offs and the ongoing research into ways to better handle the downsides of strong encryption.

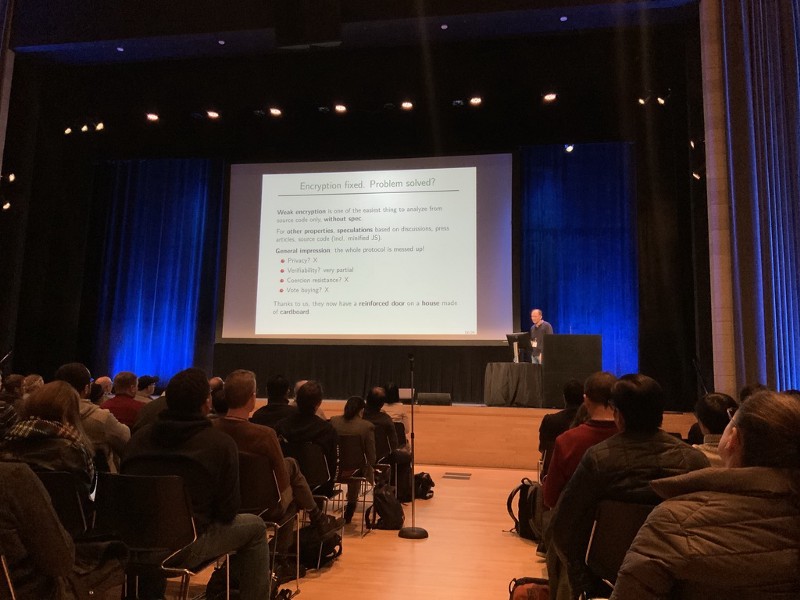

Internet / Blockchain Voting

Internet voting on the blockchain keeps getting shredded by security researchers. There were two talks in the conference on different systems and countries. The first country, Switzerland, aborted plans to use online voting after researchers found a multitude of issues. The second country, Russia, pushed forward and did it anyway, including major protocol changes pushed out two days before the election.

Quote of the day: “Thanks to us, they now have a reinforced door on a house made of cardboard,” said Pierrick Gaudry regarding the Moscow voting scheme. Oh, and the blockchain that was used in this one completely disappeared a few hours after the election was over.

On Side-channel Attacks

Once thought to be impractical for real-world attacks, side channels are the gift that keeps on giving. If you’re not familiar with this class of attack, basically it encompasses anything that involves observation of some facet of a running machine, such as the power usage, how fast some request is processed, the sound of fans, or whatever, and leverages that information to extract cryptographic keys.

The conference had a host of presentations showing just how practical these attacks can be.

For all of these attacks, the best solution is crypto code whose behavior does not vary with secret data: any code that touches private cryptographic keys should have no secret-dependent if statements, variable-length loops, and so on. Then code pathways are always the same, timing is the same, cache behavior is the same, and nothing can be learned through side channels. IronCore’s recrypt-rs library uses constant-time code. So does BearSSL. Sadly, very little else is built this way, mainly because it’s very difficult. Here are some of the consequences:
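Before getting to those consequences, here is a minimal sketch of the difference between secret-dependent and secret-independent code, using a byte-string comparison as the example. The function names are my own, and this is only an illustration of the idea: the Python interpreter makes no real constant-time guarantees, so in practice you would reach for the standard library’s `hmac.compare_digest` (or a vetted library in a lower-level language).

```python
import hmac


def leaky_equal(a: bytes, b: bytes) -> bool:
    """Early-exit comparison: running time depends on where the first mismatch is."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:  # secret-dependent branch: returns sooner on an early mismatch
            return False
    return True


def constant_time_equal(a: bytes, b: bytes) -> bool:
    """Comparison that does the same amount of work wherever the mismatch is."""
    if len(a) != len(b):  # the length is treated as public information here
        return False
    diff = 0
    for x, y in zip(a, b):
        diff |= x ^ y  # accumulate differences, never branch on them
    return diff == 0


secret_tag = bytes.fromhex("a1b2c3d4e5f60718")
guess = bytes.fromhex("a1b2c3d4e5f60719")

print(leaky_equal(secret_tag, guess))               # False
print(constant_time_equal(secret_tag, guess))       # False
print(hmac.compare_digest(secret_tag, secret_tag))  # True
```

Both functions give the same answers. The only difference is how much an observer with a stopwatch can learn about where the mismatch occurred.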

Trusted Platform Modules (TPMs)

These are chips inside your computer or other device meant to securely hold onto secrets and protect the operating system. These are meant to be resistant to hardware and software attacks. Unfortunately, the bulk of deployed TPMs come from two manufacturers: Intel and STMicroelectronics. Researchers broke both of these using practical side channels.

Pseudo-Random Number Generators

In computing, when we go to use a random number, we’re actually using some small amount of hopefully random input (entropy) as a seed, and a function that expands it into a long stream of random-looking output. This is a Pseudo-Random Number Generator (PRNG), and when it comes to cryptography, these are everywhere.
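As a rough sketch of that seed-plus-expansion idea, here is a toy hash-based generator. The `ToyPRNG` class and its labels are made up for illustration and are not a vetted design; real code should use the operating system’s generator (for example Python’s `secrets` module).

```python
import hashlib


class ToyPRNG:
    """Hash-based generator for illustration only; NOT a vetted DRBG."""

    def __init__(self, seed: bytes) -> None:
        # The small amount of entropy goes in once, as the initial state.
        self._state = hashlib.sha256(b"seed" + seed).digest()

    def next_bytes(self, n: int) -> bytes:
        out = b""
        while len(out) < n:
            # Output and next state are derived from the current state under
            # different labels, so the output is not the state itself.
            out += hashlib.sha256(b"out" + self._state).digest()
            self._state = hashlib.sha256(b"next" + self._state).digest()
        return out[:n]


a = ToyPRNG(b"16 bytes of seed")
b = ToyPRNG(b"16 bytes of seed")
print(a.next_bytes(64) == b.next_bytes(64))  # True: same seed, same stream
```

The last line is the whole point: everything downstream is determined by the seed and internal state, so anyone who learns either can reproduce every "random" value that follows.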

This year I learned that these, too, can be subject to side-channel attacks. And in many cases, if you can reverse the PRNG, you can break the crypto without breaking the crypto. Ouch.

SPECTRE

SPECTRE is a class of attacks that were in the news a lot a couple of years ago. The name comes from “speculative execution,” a CPU optimization in which the processor guesses which way a branch will go and runs ahead down that path, discarding the work if the guess turns out to be wrong. Attackers figured out how to exploit this by indirectly probing the cache for traces of that discarded work, including work on branches that should be illegal to take.

The talk on SPECTRE was frightening because the bottom line from it is this: we haven’t yet fixed the problem and we don’t have any line of sight to getting it fixed either. Some pieces are better now, but others still aren’t. Not only that, but new attacks like NetSpectre make it practical to remotely exploit the problem. And if you combine that with distributed SSL termination endpoints like the ones hosted by Google, Amazon, Cloudflare, etc., the result is wholesale theft of private SSL keys. Scary!

On Privacy Preserving Protocols

Apple “Find My” Service

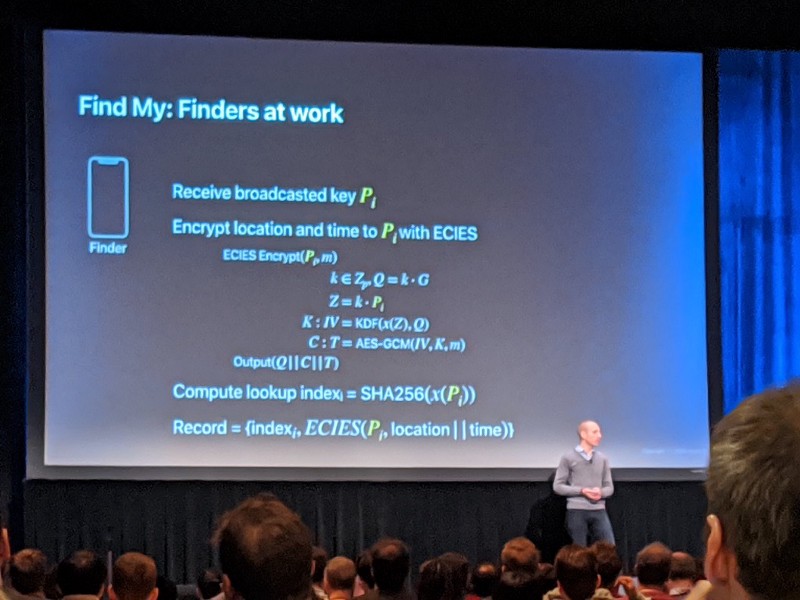

An engineer from Apple explained how they help people find lost devices by using iOS devices everywhere as “finders” that report what’s around them, without compromising the privacy of either the finder or the device being found.

Today, your device has to be alive and connected to the Internet when you go to the “Find My” service to see where your devices are. You effectively send them all a message saying “where you at?” and a map displays the results as they come in. If they come in.

The core idea in their new approach is to have rotating key pairs that are deterministic in nature. So if you want to search for your device, you can work out which key pair it will use for any 15-minute time block. The public key is the broadcast identifier. Finder devices encrypt a location to the public key when a beacon is seen. Only your own devices, which can derive the matching private key, can see what location was encrypted. Apple sees things like IP addresses but promises not to store that information for long (and they likely have other services with lower privacy guarantees in that regard).
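To make the "deterministic but rotating" part concrete, here is a small sketch of deriving a per-window secret and identifier from a master secret shared among your own devices. Everything here is an assumption for illustration: the function names, the use of HMAC-SHA256, and especially `broadcast_id`, which is only a stand-in for a real public key (the actual scheme uses elliptic-curve key pairs so that finders can encrypt to the identifier, which a hash cannot do).

```python
import hashlib
import hmac

WINDOW_SECONDS = 15 * 60  # the 15-minute block described in the talk


def window_index(unix_time: int) -> int:
    """Map a timestamp to the number of its 15-minute window."""
    return unix_time // WINDOW_SECONDS


def window_secret(master_secret: bytes, index: int) -> bytes:
    """Derive the per-window secret; a real system would turn this into a private key."""
    label = b"window" + index.to_bytes(8, "big")
    return hmac.new(master_secret, label, hashlib.sha256).digest()


def broadcast_id(master_secret: bytes, index: int) -> str:
    """Hypothetical stand-in for the public key broadcast during this window."""
    return hashlib.sha256(window_secret(master_secret, index)).hexdigest()[:16]


master = bytes(range(32))          # shared by the owner's devices, never broadcast
t = 1_753_888 * WINDOW_SECONDS     # a timestamp at the start of some window

lost_device = broadcast_id(master, window_index(t))
owner_lookup = broadcast_id(master, window_index(t + 60))
next_window = broadcast_id(master, window_index(t + WINDOW_SECONDS))

print(lost_device == owner_lookup)  # True: same window, same identifier
print(lost_device == next_window)   # False: the identifier rotates
```

Because the derivation is deterministic, the owner can compute the identifiers for any past window and ask for reports filed under them, while an observer without the master secret just sees unrelated identifiers every 15 minutes.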

Apple’s privacy-preserving device location tracker


Facebook Messenger

Facebook seems pretty serious about making Messenger end-to-end encrypted and bringing all of the features of the current Messenger along in privacy-preserving variants. Today, they have a “secret” mode, but it only works for one-on-one conversations and lacks many features, like the ability to share photos.

This talk was more a discussion of the requirements and problem statements. It seems they’re still early in the process and likely years away from delivering here. But they’re saying the right things, even if those statements are only scoped to Messenger and not the broader platform. Here are some quotes that caught my attention:

- “While Facebook has traditionally focused on creating a small town square, with Messenger, we want to create an intimate living room.”

- “Privacy is a human right.”

- “Having strong end-to-end privacy guarantees is a top goal.”

- “Getting security right is a scaling challenge.”

- “More engineers writing more code just makes more opportunities for bugs to be introduced.”

- “Need frameworks and APIs that are secure by default.”

- “We are going to ship this… this is happening.”

Stolen Password Checks

Google presented on how they preserve privacy while letting someone check whether their username and password have been compromised and are available on the “dark web.” Immediately after they described their system, there was a presentation on flaws in their first protocol and in approaches by some similar services.

Passwords, like English, have frequency distributions, and a surprising amount of data can be reversed because of that fact.

Google’s basic approach is to put sets of hashed username and password combos into buckets that share a hash prefix and then to ship back more results than are necessary. They use an expensive hashing algorithm (Argon2) to make it costly for someone to extract information from their list of 4 billion compromised usernames and passwords.
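The bucket idea can be sketched roughly as follows. This is a simplified illustration, not Google’s protocol: the function names and the two-byte prefix are assumptions, PBKDF2 from the standard library stands in for Argon2, and any further blinding a real deployment would need is left out entirely.

```python
import hashlib

PREFIX_BYTES = 2     # hypothetical bucket width: 16 bits, so 65,536 buckets
ITERATIONS = 10_000  # kept low so the example runs quickly


def slow_hash(username: str, password: str) -> bytes:
    # PBKDF2 is a stand-in for Argon2, which is not in the standard library.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), username.encode(), ITERATIONS)


def build_buckets(breached):
    """Server side: group hashed credentials by the first bytes of their hash."""
    buckets = {}
    for username, password in breached:
        digest = slow_hash(username, password)
        buckets.setdefault(digest[:PREFIX_BYTES], set()).add(digest)
    return buckets


def server_lookup(buckets, prefix: bytes):
    """The server sees only the prefix and returns the whole bucket."""
    return buckets.get(prefix, set())


def client_check(buckets, username: str, password: str) -> bool:
    digest = slow_hash(username, password)
    candidates = server_lookup(buckets, digest[:PREFIX_BYTES])
    return digest in candidates  # the final match happens on the client


breached = [("alice@example.com", "hunter2"), ("bob@example.com", "password1")]
buckets = build_buckets(breached)

print(client_check(buckets, "alice@example.com", "hunter2"))       # True
print(client_check(buckets, "alice@example.com", "a-better-one"))  # False
```

The server only ever learns which bucket was asked about. The frequency problem mentioned in the follow-up talk shows up exactly there: if some credentials are far more popular than others, the bucket being queried is itself a hint.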

The work on attacking the service suggested putting results in multiple buckets to smooth out the frequency.

Mozilla Site Blocking Telemetry

Mozilla wants to collect information on what their block lists are blocking or not in the real world. But that information can leak info about a person’s browsing habits. So they’ve cooked up a mechanism that reports a boolean, for each site on the block list, on whether or not it was blocked that day one or more times. Then that information is split into two parts that, when added together, will result in either a one or a zero and the two parts are sent to two different servers operated by independent entities. Between them, they can come up with aggregates without learning any individual value.
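The splitting step described above is additive secret sharing, and a minimal sketch looks like this. The modulus and function names are my own choices for illustration, and the extra checks against malicious clients are not shown.

```python
import secrets

MODULUS = 2**61 - 1  # hypothetical modulus; it only needs to exceed the number of clients


def split_bit(bit: int) -> tuple[int, int]:
    """Split a 0/1 value into two shares that sum to it modulo MODULUS."""
    share_a = secrets.randbelow(MODULUS)   # uniformly random, reveals nothing alone
    share_b = (bit - share_a) % MODULUS    # also looks uniformly random on its own
    return share_a, share_b


def aggregate(shares) -> int:
    """What each server does: add up the shares it received."""
    return sum(shares) % MODULUS


client_bits = [1, 0, 1, 1, 0]  # "was this site blocked today?" for five clients
shares = [split_bit(bit) for bit in client_bits]

total_a = aggregate(a for a, _ in shares)  # server A sees only the first shares
total_b = aggregate(b for _, b in shares)  # server B sees only the second shares

print((total_a + total_b) % MODULUS)  # 3
```

Each server’s view is a pile of random-looking numbers. Only when the two totals are combined does the count of clients that saw a block appear, and no individual client’s bit is ever reconstructed.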

It gets fancier in order to protect against a malicious client that wants to mess up the stats, but the most interesting thing is the struggle they’ve had taking a split-trust approach. They’re still searching for an entity willing to help run one of the collection points that is completely independent, hosted on a different platform (i.e., not also AWS), and in a different country/legal jurisdiction. And even then, it’s hard to guarantee someone won’t get access to both sets of data or that the entities won’t collude.

On the (In)security of New Communications Standards

5G

If you’re like me, you mostly think about 5G as new infrastructure, new radio frequency range, and as bringing faster speeds. But it’s a new protocol too, and it’s supposed to bring better privacy and security in the face of IMSI Catcher devices and other common attacks against today’s 4G/LTE.

Unfortunately, there are a bunch of problems. In particular, the protocol does a good job of preventing someone from tracking you, except if they can trigger an error. And with that error condition, you become fully trackable. Fixing it “requires a major redesign.” Doh. Some of the other discovered issues have already been fixed.

As a side note, this talk was a win for formal verification methods, which is to say, formally modeling a protocol and having computers apply attack models to the protocol model to find problems.

WPA3

The Dragonblood attack against WPA3 wins the award for the best attack name of the conference. It’s another side-channel attack, but it fits better in this section. Suffice it to say that researchers keep getting more clever about exploiting non-constant-time code. In this case, the routine that derives a session key from the network’s password did not run in constant time. Using a dictionary, attackers were able to profile timings, vary another parameter (the MAC address), and thereafter, by passively observing timings, quickly figure out the network password. The protocol designers were told of the potential issue early on but dismissed it as not a practical attack. Oops.
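Here is a toy model of why a password-dependent loop is a problem. It is not the WPA3 derivation: the modulus, the hash inputs, and the function names are assumptions chosen to keep the example short. It only mimics the general "try candidates until one passes a check" shape, where the number of tries depends on the password and the MAC address.

```python
import hashlib

P = 2**255 - 19  # a well-known prime, used here only as a convenient toy modulus


def is_quadratic_residue(x: int) -> bool:
    """Euler's criterion modulo the prime P."""
    return pow(x, (P - 1) // 2, P) == 1


def iterations_needed(password: str, mac: str) -> int:
    """Count attempts until a candidate passes the check (toy model only)."""
    counter = 1
    while True:
        seed = hashlib.sha256(f"{mac}|{password}|{counter}".encode()).digest()
        candidate = int.from_bytes(seed, "big") % P
        if is_quadratic_residue(candidate):
            return counter  # loop length, and so running time, depends on the password
        counter += 1


passwords = ("letmein", "hunter2", "correct horse")
for mac in ("02:00:00:00:00:01", "02:00:00:00:00:02"):
    print(mac, [iterations_needed(pw, mac) for pw in passwords])
```

Each MAC address yields a different set of iteration counts, so a handful of timing measurements acts like a fingerprint of the password. An attacker can compute the same counts offline for every word in a dictionary and throw away the ones that don’t match.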

On the Rest

- There are a lot of really cool multi-party computation frameworks that allow you to code in a high-level language and compile down to something usable. Sadly, most of them are hard to use and poorly documented/written by academics. Still, a few are good and worth a closer look.

- The author of the book Serious Cryptography argued that most symmetric algorithms use more rounds than necessary for the risk models, and that lowering them would have a negligible impact on risk while giving a big speedup in performance. I had the distinct impression that most of the cryptographers in the audience like extra margins in their security and weren’t buying what he was selling. Odds of NIST reducing security requirements? Very long.

- Secret sharing, where keys get split into multiple parts and n of m parties have to come together to decrypt something, has been stuck in the relative dark ages, ignoring all of the progress on symmetric algorithms over the years. So improvements like Associated Data, Authentication, Nonces, etc. are missing from the key shares, which means they can get corrupted or worse. Sadly, there is no implementation of the speaker’s proposed modern approach, which sounded very promising.
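For the secret-sharing item above, here is a minimal sketch of the classical approach (Shamir’s scheme), which is the kind of construction the speaker was contrasting against, not his proposed replacement. The prime and function names are my own choices, and the example deliberately shows the weakness: nothing authenticates a share.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime; the secret must be smaller than this


def split_secret(secret: int, threshold: int, parties: int):
    """Return `parties` points on a random polynomial of degree threshold - 1."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, parties + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares) -> int:
    """Lagrange interpolation at x = 0 recovers the constant term."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


key = 123456789
shares = split_secret(key, threshold=3, parties=5)
print(recover_secret(shares[:3]) == key)  # True

# Flip one share slightly: recovery "succeeds" but yields the wrong key.
tampered = [(shares[0][0], (shares[0][1] + 1) % PRIME)] + shares[1:3]
print(recover_secret(tampered) == key)    # False
```

Any three of the five shares recover the key, but a corrupted share silently produces garbage instead of an error, which is the gap that authentication and associated data would close.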

Summary

This got long and I didn’t even touch on half the talks. Real World Crypto is a deeply technical conference that focuses on solutions to concrete problems that work in practice — and that’s very exciting.

We desperately need more of these solutions and they need to be more widely available for use in a broader array of applications. We’ve all lost control of our data — people and businesses alike — but we can choose to vote with our wallets and back the businesses that invest the time and money required to develop applications that are private by design and by default.